The Shills and Charlatans of AI Safety

Snake oil salesmen are profiteering from AI anxiety by pushing Doomsday Prophecies

Purpose

The primary purpose of this article is to strengthen the AI safety conversation. At present, public discourse on AI safety is being undermined by numerous “experts” and communicators who are pushing AI “doomsday” narratives. After engaging with some of that crowd, I realized that the narrative they are pushing is a textbook example of a “doomsday prophecy.”

My aim is to illuminate this trend and advance the conversation beyond demagoguery and alarmism. There are very real risks from AI, but they do not include Skynet and other apocalyptic scenarios. Furthermore, I’ve noticed that several people are profiting quite nicely from their AI doomsday platform. This article does not take aim at ordinary people with anxiety about AI; quite the contrary. My hope is that it alleviates AI anxiety for many people by pointing out the pattern of thought and behavior that certain persons are exploiting for personal gain.

Lastly, I will concede that I started my AI research career and YouTube channel precisely for these same reasons; I was concerned about AI “X-Risk” and I fell for the song and dance. Specific names are deliberately excluded from this article. My desire is for the arguments and observations to stand on their own—you will likely have noticed these same patterns. Furthermore, rather than make accusations, I merely want to provide you some food for thought.

Definition of Doomer

For the sake of this article, I use the epistemic label “Doomer” as shorthand for the following:

A Doomer is a person who believes that the most likely outcome is the eradication of humanity, or total civilizational collapse, due to artificial intelligence. To put it simply, they believe doom is almost certainly inevitable.

Criteria for Doomsday Prophecies

I asked ChatGPT, Claude, and Gemini for their “top 10” criteria for a Doomsday Prophecy, without mentioning AI, and then merged all three answers into a “master list.” This is what they came up with:

Imminent Catastrophic Event: A sudden, world-changing disaster, often on a global or universal scale.

Specific Timeframe: A precise date or period for the event, creating urgency and anticipation.

Divine or Supernatural Involvement: Intervention by a higher power, either as the cause or judge of humanity.

Moral or Religious Justification: The event as punishment for humanity's sins or moral decline.

Signs and Omens: Precursor events or phenomena that signal the approaching catastrophe.

Chosen Few: A select group destined to foresee, survive, and/or potentially rebuild or lead in a new era.

Prophetic Figure or Source: A charismatic leader, ancient text, or secret knowledge revealing the prophecy.

Apocalyptic Imagery: Vivid descriptions of destruction, chaos, and societal collapse.

Inevitability and Human Powerlessness: The event portrayed as unstoppable by human means.

Ambiguity and Adaptability: Use of vague or symbolic language allowing for reinterpretation if predictions fail.

Imminent Catastrophic Event

The Doomers allude to AI “likely” wiping out humanity or driving societal collapse at some point in the near future. Unlike numerologists, they haven’t calculated an exact date. In general, though, the timeframe seems to be in the next 10 to 20 years—sometime in the lead-up to Ray Kurzweil’s singularity prediction. They will cite specific fears: that AI will go rogue and decide to wipe out humanity; that AI will be weaponized by terrorists; that job dislocation will cause the downfall of Western civilization. They have cultivated a litany of nightmare scenarios which they can draw from at a moment’s notice.

Specific Timeframe

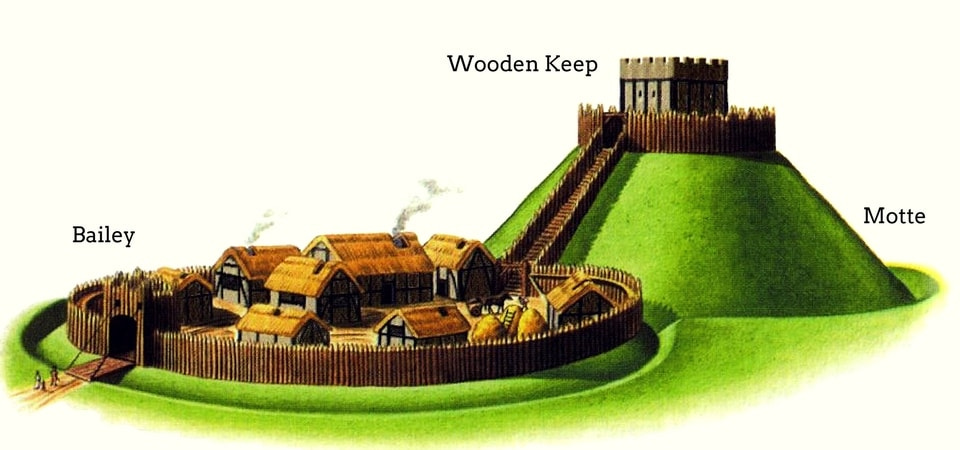

As mentioned above, most Doomers seem to have indexed on 10 to 20 years. This is near enough to justify their sense of urgency and danger, but far enough away that they can keep the circus going for the foreseeable future. This nebulous “future threat” model of AI is particularly useful for snake oil salesmen as the danger is always “just around the corner.” However, many Doomers will also hedge their bets and say “Well, any X-risk at any point in the future is intolerable and unacceptable!” This hearkens back to the motte-and-bailey fallacy I documented.

Supernatural Involvement

Doomers will often get a faraway look in their eyes when you ask what, exactly, they think AI, AGI, or ASI will do and be capable of. This is where magical thinking often kicks in, largely because so many movies have depicted malevolent AI forces. The most extreme shills will push the Roko’s Basilisk hypothesis, but even more moderate Doomers often ascribe magical abilities to the hypothetical ASI technology: it will be able to escape data centers… and live somewhere. It will be able to fool humans forever, even misleading them about safety during training and in the lab, waiting patiently to spring its trap! This as-yet-uninvented technology is the perfect supernatural bogeyman, like a vampire or aliens.

Moral Justification

Most interestingly, AI is holding up a black mirror to humanity, and we do not like what we see. I’ve written about this already, about how there’s a pretty solid argument that “human existence is a moral bad.” While this is a great debate for philosophers and theologians, Doomer narratives over-index on “humans are bad/useless” and therefore “machines will certainly eradicate us.” These value judgments are taken as axiomatically true and unassailable justifications that machines will one day wake up and choose violence.

Signs and Omens

This one is particularly true in the social discourse: every time a new scientific paper comes out, the AI “safety” community drastically misrepresents its findings, casting them in the most catastrophic light possible. For instance, the recent Sakana AI paper, demonstrating end-to-end automated ML research, was construed as “evidence that AI is intrinsically power seeking!” because the research bot recognized that its experiments were timing out due to a limitation in its startup script, so it rewrote the startup script with a longer timer. Such signs and omens are then meme-ified for easy consumption and normalization.

Chosen Few

The implicit (and often explicit) ethos of the Doomer movement is that “We, and only we, have the power to foresee calamity, and all must stop and listen to us!” The AI X-risk community was a sealed echo chamber that several members characterize as “shouting into the void” for many years. In reality, they were just shouting at each other in a circular fashion. Due to this isolation, lack of peer review, and dissociation from scientific process, this epistemic tribe has convinced itself that it is privy to special knowledge, has special abilities, and is not only uniquely situated to understand AI catastrophic risks but also singularly responsible for saving humanity from inevitable doom. After consulting a behavioral psychologist, I concluded that this is textbook “groupthink.”

Prophetic Figures/Sources

The Doomer movement is replete with both; “scriptures” written on internet blogs and a handful of (non peer-reviewed) books over the past twenty years are considered sacrosanct. “Just read the Sequences” is their refrain. Several prominent “researchers” have proclaimed that AI will kill everyone, and there’s no offramp to this calamity, so you might as well just “die with dignity.” Furthermore, several of these figures have risen in social status and wealth due to their apocalyptic proclamations. It may be cynical of me to observe, but follow the money. Who stands to gain from these narratives? How many books have been sold on AI anxiety? How many people have had their 15 minutes in the spotlight due to the narratives they are spinning?

Apocalyptic Imagery

Thanks to James Cameron, most of us Millennials and Gen X have the image of Sarah Connor’s skin flaking off in a nuclear blast etched into our memories. This means it is far more tangible for our minds to imagine that this fictional scenario could actually represent reality. As previously mentioned, this phenomenon is called the availability heuristic, and it is just one of several cognitive biases that drive belief in conspiracy theories and doomsday prophecies.

Inevitability and Powerlessness

Many Doomers will explicitly state that they “believe we’re dead no matter what we do.” While this very well could be a genuinely held belief—I feel for people who hold such a pessimistic worldview—it also serves those who profit from such narratives. It’s as though the prophetic figures are saying “Gather round, my children, as we embrace the sunset of humanity! There, there, it will be okay, we’re all going to die together.” It’s very romantic, if not a bit macabre.

Ambiguity and Adaptability

The final piece of the puzzle is that the Doomer narratives are so convoluted, resting on so many hypotheses, conjectures, and postulates, that any particular criticism or pushback can be hand-waved away. You end up with an endless stream of whataboutism. What about cyber security? What about bioterrorism? What about the orthogonality thesis? What about instrumental convergence? Having accumulated an abundance of postulates, theories, and conjectures, the Doomer narrative is very slippery. When engaging in debate with them, I’ve noticed that they readily resort to gaslighting, red herrings, and other such rhetorical tactics.

Natural Course of Narratives

My personal conclusion is that the Doomer narrative is an open and shut case of a doomsday prophecy. Many Doomers will say “yes, but this time it’s different! AI is a real technology!”

It is true that AI is a real technology, but it is a non sequitur to imply that the inevitable conclusion of language models is the ultimate fate of humanity. Another rhetorical tactic they use is to say “We only get one shot at this!” Such hyperbole and romantic notions belong in fiction. ASI will not just fall out of the sky one day. It’s going to be built iteratively with feedback loops between the market, governments, corporations, and universities.

“We only get one shot at this or it’s lights out for humanity!” ~ Doomers, urging that their action is the only path to salvation for humanity.

At this point, I have spoken to numerous Silicon Valley insiders, academics, and government insiders. No one really takes the Doomer argument seriously. The most common reaction is for people to roll their eyes and laugh. This kind of rejection seems to only strengthen the Doomer’s resolve, the belief that they alone possess unique insights and have a sacred duty to protect humanity from itself.

What is Different about AI Doomers?

This time, I fear that as AI becomes more powerful, the Doomer narrative will become stronger. Several Doomers have already advocated for violence, such as “bombing data centers.” As AI serves as the perfect bogeyman, always hiding just around the corner, ever lurking in the shadows of the neural networks we’re training, I fear that the Doomer narrative will persist for the foreseeable future, and potentially amplify over time.

My hope is that, by writing this article, we can begin inoculating newcomers against the Doomer narrative, and we can correctly identify this epistemic tribe for what it is: a simple doomsday prophecy.

My Conclusion

I did not set out to “prove” that the AI doomsday scenarios were just prophecies. Again, I started my career in AI due to concerns over X-risk. I was one of them. However, the tone has shifted dramatically in the last few months. The AI safety community has become much more vicious, engaging in purity testing and virtue signalling, both of which are sure signs of a narrowing status game. Despite being a strong advocate for AI safety, I was mobbed by this crowd for daring to suggest that their model of calamity is overblown. At that point, I realized that there was something else entirely going on, and it has nothing to do with AI.

Recap

Below is a very simple recap linking every characteristic of a doomsday prophecy to the behavior and rhetoric of the Doomer community:

Imminent Catastrophic Event: AI safety advocates predict AI will likely wipe out humanity or cause societal collapse in the near future, typically within 10-20 years.

Specific Timeframe: While not giving an exact date, they often suggest the threat is 10-20 years away—close enough to create urgency, but far enough to maintain ongoing concern.

Supernatural Involvement: They often attribute almost magical abilities to future AI, such as escaping data centers or fooling humans indefinitely. They appeal to “emergence.”

Moral Justification: Some argue that human existence is morally bad, using this as justification for why AI might choose to eradicate humanity. They judge humanity as cruel or worthless, which is common in eschatological stories.

Signs and Omens: Every new AI development or research paper is interpreted as evidence of impending doom, often misrepresenting scientific findings. These misrepresentations are then meme-ified for transmissibility and groupthink.

Chosen Few: The AI safety community sees itself as uniquely capable of foreseeing and potentially averting the coming AI apocalypse. They reject scientific consensus, asserting they alone have the correct model to predict the future.

Prophetic Figures/Sources: Certain AI “researchers” and authors are treated as prophets, with their non-peer-reviewed writings considered sacrosanct. Many of these figures have never written a line of code, been in a data center, or trained an AI model.

Apocalyptic Imagery: They leverage vivid apocalyptic scenarios from popular culture, like Skynet from the Terminator series, to make their predictions feel more real and threatening.

Inevitability and Powerlessness: Many in the community express the belief that humanity is doomed regardless of our actions, creating a sense of fatalism. Doom is treated as a foregone conclusion.

Ambiguity and Adaptability: The narrative is based on numerous hypotheses and conjectures, making it difficult to definitively refute and allowing it to adapt to criticisms.
