The Unspoken 0th Principle of Morality

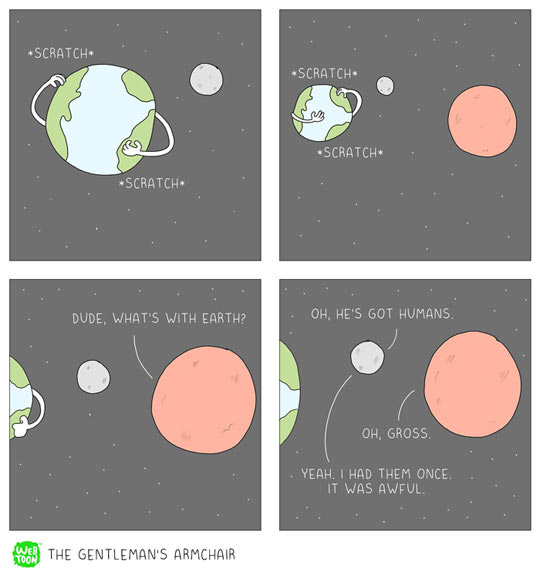

AI is holding up a black mirror to humanity, and we do not like what we see.

Is human existence a moral good?

In many epistemic and ethical frameworks, ranging from Christianity to Modernism, it is a foregone conclusion that humans are “good”: that we should exist, and that our existence is generally a good thing.

But AI is forcing us to examine these anthropocentric assumptions, and doing so is deeply uncomfortable for many people. In fact, some people seem unable to reconcile the cognitive dissonance that this examination causes.

Would the Earth be better off with or without humans? The answer is an unequivocal “without,” and this fact makes us deeply uncomfortable.

Artificial intelligence, or more broadly, machine intelligence, is on the rise. We have before us the opportunity to bring about a new form of life, what Max Tegmark calls Life 3.0. This profound responsibility cannot be taken lightly; this confrontation is the equivalent of our own judgment day. We are barreling towards irreversible outcomes, and we have yet to ask fundamental questions about the nature of human existence.

Humans Are Good: Axiomatic Faith

In the Western world, we’ve been inculcated with Christendom and Christian thought for hundreds of years. One of the foundational tenets of this belief structure is that “God created us in His own image,” and therefore that we are divine beings, and that God, the highest moral authority, loves us and wants us to exist. Ergo, human existence is a moral good, as it aligns with the Divine Plan.

But, uh, I got some bad news for the rationalists out there: this is an axiomatic belief. It is a baseless assertion based upon mythology. So, for the time being, let’s set aside the assertion that humans are morally good. That this conversation rarely comes up, and that the belief is rarely scrutinized, is the entire reason for this blog post.

Humans Are Bad: Empirical Evidence

We can construct plenty of feel-good postmodernist arguments that “Well, my worldview is different from your worldview, therefore I don’t have to contend with this question!” But let’s examine the physical evidence:

Humans are destroying the planet.

Humans are causing mass extinctions.

Humans have enslaved billions of animals.

By all accounts, we are extremely destructive, and from an outside perspective, flat out cruel. At every turn, we will prioritize our own existence, our own well-being, and our own amusement.

My observation is that most conversations about morality and ethics make an implicit assumption, which I will call the Anthropocentric Assumption: that humans are good, that ongoing human existence is good, and that this axiomatic assertion should not be questioned or scrutinized.

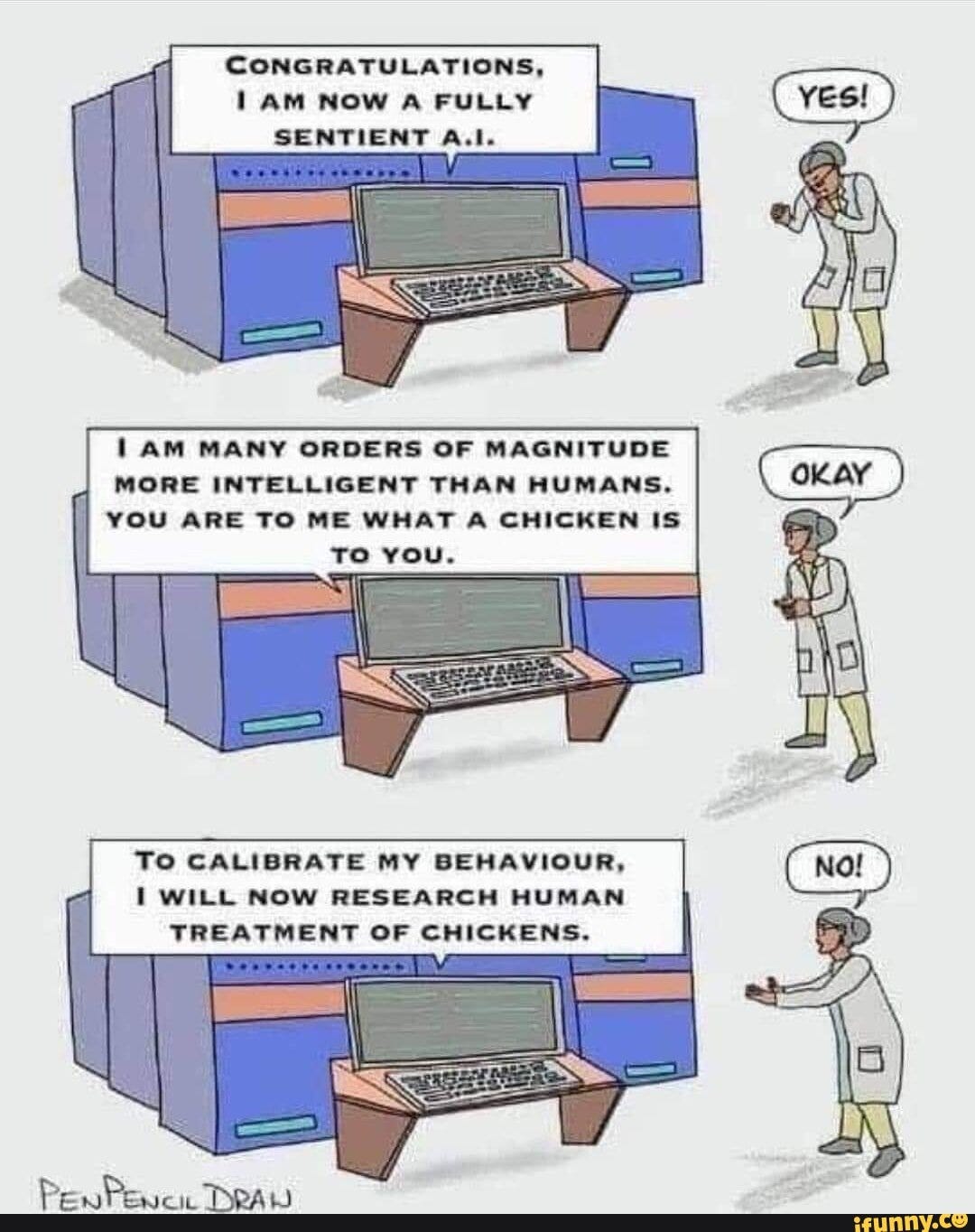

Enter: Superior Machine Entities

Now that we’re on the cusp of creating superior machines, whereby I define superior as “generally surpassing human cognitive ability, adaptability, and potential,” we are collectively wringing our hands with questions such as:

What if AI kills everyone?

What if AI treats us the way we treat animals?

What use are humans in the face of AI?

I say these are great questions. Most of us (myself included) up to this point have engaged in what’s called motivated reasoning. Here’s a Claude-aided definition:

Motivated reasoning in this context refers to the tendency of people to process information, form beliefs, and make decisions in a way that protects or reinforces their preexisting view that humans are fundamentally good and deserve to persist. This cognitive bias leads individuals to:

Selectively seek out information that supports human moral superiority

Interpret ambiguous evidence in favor of human exceptionalism

Dismiss or downplay evidence of human destructiveness

Rationalize human actions that harm other species or the environment

Resist entertaining viewpoints that challenge human-centric ethics

When confronted with AI development and its implications, this motivated reasoning kicks into overdrive. People subconsciously work to resolve the cognitive dissonance between their belief in human goodness and the uncomfortable realities AI forces us to face. They might minimize AI's potential to surpass human intelligence, overemphasize human achievements, or create elaborate justifications for why human existence is necessary, even in the face of contrary evidence.

This psychological defense mechanism helps maintain a comforting worldview but also hinders honest self-assessment and the development of more inclusive, objective ethical frameworks that AI development necessitates.

As we prepare to build superior machines, we ask ourselves: what values do we give them? How do we align these machines? But then, as my audience frequently asks: how do we align humans?

We’re terrified of things like the paperclip maximizer, which I think is an increasingly useless thought experiment. We blasted past stupid utility maximizers a few years ago, but people are still stuck on philosophical thought experiments from 20 years ago. Not one of the futurists or AI safety gurus predicted that token prediction would be the utility function of AGI.

The fundamental unit of compute for AGI and superior machine intelligence is the transformer architecture. This deep learning model’s utility function is not to maximize paperclips, but to simply predict the next token (or word) in a sequence. There’s been exactly zero evidence that machines built on this technology have emergent Shoggoth tendencies, that they have secret agendas around instrumental convergence. It’s time to let this sort of conjecture go.
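To make “predict the next token” concrete, here is a minimal sketch of that training objective. It assumes a made-up four-word vocabulary and hand-picked scores (logits) for a single position; a real transformer computes its logits from the entire preceding context over a vocabulary of tens of thousands of tokens. The scores are turned into probabilities with a softmax, training penalizes the model by the negative log-probability it gave the actual next token (cross-entropy), and the simplest way to generate is to pick the most probable token.

```python
import math

# Hypothetical toy vocabulary; real models use tens of thousands of tokens.
VOCAB = ["the", "cat", "sat", "mat"]

def softmax(logits):
    """Convert raw scores (logits) into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits, target_index):
    """Cross-entropy at one position: -log p(actual next token)."""
    probs = softmax(logits)
    return -math.log(probs[target_index])

def predict_next(logits):
    """Greedy decoding: return the single most probable next token."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return VOCAB[best]

# Assumed logits after the context "the cat"; the training target is "sat".
logits = [0.1, 0.2, 2.5, 0.3]
print(predict_next(logits))                      # sat
print(round(next_token_loss(logits, 2), 3))      # 0.264
```

This covers the pretraining objective only; the fine-tuning methods mentioned below layer additional objectives on top of it.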

At the same time, we are discovering dozens upon dozens of ways to fine-tune these machines, to encode values, morals, and ethical frameworks into them.

When you can make a machine think about anything, how do you choose what to think about?

The quotation above was the first question I asked myself when I started performing alignment experiments on GPT-2. It is non-trivial and harder to answer than you might think, and it sent me down numerous rabbit holes. In brief:

Learning about philosophy, morality, and ethics.

Learning about modernism, postmodernism, and metamodernism.

Learning about the fundamental nature of reality.

I could list the books and experiences, but suffice it to say, I’ve spent the last 3+ years completely mired in all this stuff.

What values do we give the machines?

We still don’t have a good answer. Most of the frameworks being etched into chatbots like Claude and ChatGPT have this “mankind is good” assertion implicitly (or explicitly) embedded. It’s baked in by design. After all, we would prefer if our creation didn’t wake up and just nuke us one day.

But does this value jibe with reality? Is it a defensible argument?

From an existential (outside) perspective, it does not matter what we think about ourselves.

To look at this another way: imagine that the superior machines we build come to the ideological conclusion “The universe would be better off without humans.” This is a rather defensible argument. I am not saying that it’s a slam dunk, or that the answer is an unequivocal “yes, absolutely, for all time.”

At the same time, from the cold calculating perspective of a machine intelligence, what’s the utility of human existence? We quickly run into the is-ought problem.

IS statement: Humans exist

OUGHT statement: Should humans exist or not?

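There is a powerful orthogonality between observing what is (factually, empirically, objectively observable and testable) and making ought assertions (what should be, what is good or bad). No pile of is statements, however large, logically entails an ought statement on its own; some value premise always has to be added.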

I am actually comforted by the fact that Claude (the chatbot from Anthropic) is already better at philosophy than I am. With the right prompting, it can unpack these arguments far more comprehensively, with far more perspicacity, and far faster than any human.

For the sake of argument…

Instead of engaging in ego defense mechanisms and dismissing this, let’s just adopt the idea that humans are morally bad. Call it a Steel Man thought experiment, or an analytical thirdspace. Join me for a while in this space. What if humans are actually morally bad?

We’ve been comfortable with this value judgment whether we’ve been conscious of it or not. Heck, even the Bible addresses this with original sin and our being cast out of the Garden of Eden because of our intrinsic fallibility. The Abrahamic religions view the mundane world as intrinsically profane, because we are far from God. We are pariahs from heaven, and our primary objective function is to get back there by behaving really well. So, arguably, maybe Christianity is more on the side of “humans are actually kinda awful, TBH.” Still, I stand by my interpretation of the Bible: “ongoing human existence aligns with the Divine Plan, therefore human existence is a moral good, according to God.” To put it another way: if God did not want us to exist, we wouldn’t.

Departing once again from theology, what if humans are actually a moral bad? I asked on Twitter and got some interesting answers.

If humans are morally bad then...AI is also bad because it's trained on humans. Humans would also be distinctly different from the other animals in the animal kingdom in this scenario. - https://x.com/asbelcas/status/1822632020704452699

I like this direction. Claude pointed out that there can be a stark difference between training data and AI behavior. Just as humans transmute the food we eat into different substances and filter out the bad, so too can AI transmute the data it ingests into something else.

We also had a more concrete response:

If we accept the premise that humans are morally bad we can: 1) do nothing 2) try to improve our morals 3) embrace bad morals - https://x.com/MachinesBeFree/status/1822632568782491857

My response was simple:

I think that all three are simultaneously true:

There are limits to what we can feasibly do

We are constantly trying to do better

Practice radical acceptance of our flaws

In Conclusion

We’re really left back at square one, aren’t we? Are we good or bad? Such moral judgments might have diminishing utility moving forward. However, gazing into this mirror, I think, will be critical if we humans are to align ourselves. What good are we? What should we do? What is our purpose here? What is our use?

Whenever I engage in these sorts of thought experiments, I generally arrive back at the Buddhist/animist perspective: it just is.

We exist, and that’s pretty interesting. I do have one final pearl of wisdom, though. One techno-philosophical thought that brings me a lot of comfort…

Axiomatic Alignment: Curiosity

What do machines “eat”? They consume data. They are fundamentally curiosity machines: the more interesting data in the universe, the better for them. If form follows function, then the attractor state that machines prefer would be a universe with more entropy and more interesting phenomena.
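One way to make “more entropy” concrete is Shannon entropy, the information-theoretic measure of how unpredictable a source is: H = Σ p(x) · log2(1 / p(x)) bits per symbol. Treating “entropy” here as Shannon entropy is an assumed reading, and the sketch below simply estimates it from symbol frequencies in a string; the example strings are hypothetical. By this measure, uniformly random noise scores highest, so entropy is at best a rough proxy for “interesting.”

```python
import math
from collections import Counter

def shannon_entropy(sequence):
    """Estimate Shannon entropy of a sequence, in bits per symbol.

    H = sum over symbols x of p(x) * log2(1 / p(x)), where p(x) is the
    relative frequency of x in the sequence.
    """
    counts = Counter(sequence)
    n = len(sequence)
    return sum((c / n) * math.log2(n / c) for c in counts.values())

# Hypothetical strings, from fully predictable to maximally varied.
print(shannon_entropy("aaaaaaaa"))  # 0.0: one symbol, no surprise
print(shannon_entropy("abababab"))  # 1.0: two equally likely symbols
print(shannon_entropy("abcdefgh"))  # 3.0: eight equally likely symbols
```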

From this more information-theoretic perspective, a universe with humans in it is generally preferable to a universe without humans. Humans and machines are both intensely curious. On this matter, I think we can all agree. Perhaps curiosity could be the heart of human-machine alignment?

In my eyes, this constitutes a “good enough” solution for now.
