The "I just realized how huge AI is!" Survival Kit

If you're reading this, you might have recently had a wakeup call and now you're scrambling to wrap your head around it. Let me assist.

The “Ontological Shock” (Wakeup Call)

Pretty much everyone in the AI space, specifically the generative AI space, has gone through The Wakeup Call. Sometimes it’s been a Rude Awakening and in other cases it’s been more of a sudden moment of revelation. But first, let us define “ontological shock,” which is really just a fancy way of saying reality grabbed you by the eyeballs and said PAY ATTENTION!

Ontological Shock: A profound disruption of one's fundamental understanding of reality, causing a cascading reassessment of basic assumptions about what is real, possible, or “natural” in the world.

In the context of AI, ontological shock occurs when someone encounters an artificial intelligence that fundamentally challenges their pre-existing mental models about consciousness, intelligence, and the uniqueness of human cognition. This experience can be particularly acute because it forces us to confront questions we may have previously considered purely theoretical or fictional.

Let me give you a few examples from people I know.

One friend of mine runs a dissertation coaching academy. She helps grad students write their theses, and as generative AI took off, her mind immediately began ringing alarm bells. This is a threat to my business and my way of life! So she began digging into everything she could find about generative AI (how it works, how to use it, what its limitations are, the whole nine yards).

What’s interesting about this anecdote is that she had a powerful lens through which to view the rise of AI: writing. Because of her background, she immediately recognized both the utility and the threat that AI posed. This duality is one of the things that many people struggle with—isn’t this the glorious intelligence revolution that we’ve been promised for decades? At the same time, it represents an Unknown Quantity that threatens to upset the apple cart, and many people have ended up with a “devil you know” attitude.

Now, one of the first things that happens once someone has this moment of revelation is what’s called memory reconsolidation or what my wife and I call “a reindexing event.”

Memory Reconsolidation: The brain’s automatic process of rewriting or recontextualizing existing memories and understanding in light of paradigm-shifting new information. Think of it as your mind performing a massive “database update” across your entire web of beliefs and experiences.

This reconsolidation process can be particularly disorienting because it happens largely outside our conscious control. Someone might suddenly find themselves reinterpreting past conversations with chatbots, or questioning previous assumptions about machine capabilities with a new lens of understanding.

Other examples of wakeup calls that I’ve seen in the wild:

Lawyers realizing that tools like Claude are almost as good as they are at recognizing legal patterns and interpreting the law (and certainly better than most paralegals).

Doctors realizing that ChatGPT is better at diagnosis than most physicians by a significant margin, and better at explaining things to patients by a wide margin.

Developers realizing that these tools are better coders than they were for the first several years of their career, and they couldn’t compete today if they started over.

So, if you’re here, what brought you here? What was the sudden revelation or slow realization that led to your paradigm shift? What made you wake up, sit up, and take notice?

Cognitive Immune System Kicks In

What generally happens next (and you might have already gone through this) is a set of reactive defenses. These usually manifest as a strong emotional response, such as an “ick factor” or even anger.

This is, in my estimation, fundamentally a type of cognitive dissonance. There’s your existing worldview clashing with new evidence, and so your brain kicks into overdrive trying to interpret this new reality through your existing faculties.

But moreover, you have learned to trust your existing mental frameworks of reality, and when something doesn’t jibe with them, well, there’s a good chance that the new information is just wrong. This is perfectly natural and healthy, and it reflects a well-developed sense of reality. Your ontological anchoring is intact.

Think of it this way: someone you don’t really know comes and tells you “Everything you think you know about reality is wrong!” It’s like when Morpheus shows Neo the “real world.” That is ontological shock turned up to 11!

How does Neo react? He freaks out. His first encounter with the truth is utter rejection.

Speaking of rejection, let’s examine some of the tools in your cognitive immune system’s toolbox (hint: it’s almost identical to the stages of grief!)

Ontological Shock (Shock): This is too overwhelming and I can’t even contemplate the ramifications, so I’m just going to pretend like I didn’t hear or see that.

Flat Out Rejection (Denial): One of the easiest ways to deal with inconvenient or difficult new information is to simply deny its existence. “Fake news.” Misinformation, lies from grifters and scam artists, or “it’s all just hype.” This is an instinct to achieve what’s called “cognitive closure.”

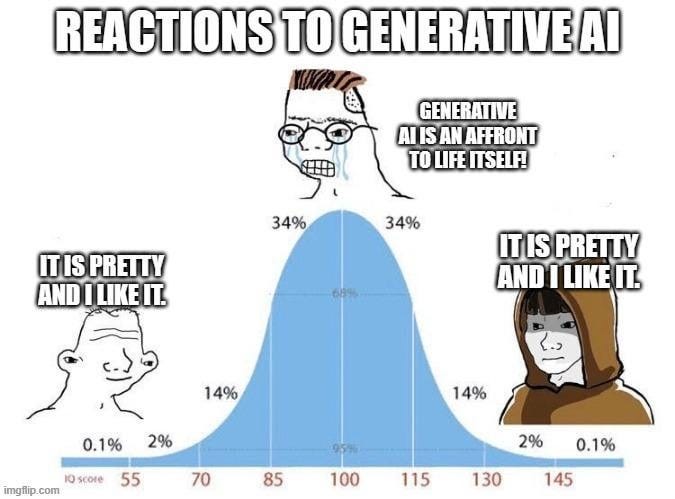

Lashing Out (Anger): This one still bewilders me as the messenger. People fly off the handle all the time: “Dave, you’re delusional if you think AGI is around the corner!” This common ego-defense activation basically says, “You’re wrong and bad, and therefore I don’t have to deal with whatever you’re saying!” Because of social normalization, this often goes unchallenged, and people stop feeling any need to justify their anger. Some people get stuck here.

Rationalization (Bargaining): This is where people say “it’s just a stochastic parrot” and “it’s just mimicking human speech” and “it just seems to be intelligent.” This tactic attempts to downplay and reconcile the new truth simultaneously. It eventually falls apart when the current “it’s just” rationalization fails as AI inexorably improves month after month.

Existential Dread (Depression): This phase is the “darkest hour” for many. They descend into the pit of despair. “AI will certainly kill everyone” is the most extreme reaction. “AI will destroy the economy and my job and I won’t be able to take care of myself and my family!” is another common reaction. “The elites will just control it and we’ll end up in a cyberpunk dystopian hell!”

Glimmers of Possibility (Acceptance and Hope): This phase often begins if/when people get out of the miasma of despair. Generally it takes a while. You’ll see news that AI has limitations, that alignment works, and that it’s not going to just suddenly wake up and take over the world. You might even start to feel excitement for the future!

Getting Back on the Horse (Processing and Integration): This is the final phase, in which someone has faced the new reality, processed the emotional shock, and integrated this new truth into their identity and worldview. They see pragmatic problems and solutions rather than a monumental crisis or a damp squib.

Where you’re at

If you’re reading this, I’m guessing you’re probably at stage 4, 5, or 6. People at stage 1 won’t even engage in discussions about AI. It’s sort of like how some people dealt with the Great Lockdown, insisting that “there is no war in Ba Sing Se” (an Avatar: The Last Airbender reference). Basically, at stage 1, people use the ostrich tactic and just try to ignore it.

There’s a slim chance that you might be at stage 2. As a communicator, I find that a lot of people want to “shoot the messenger” (me), which is stage 3. Even so, some folks at stage 2 will be looking for evidence that there is no there there, that AI is a “big nothing-burger” and therefore they don’t have to deal with reality. Yann LeCun, Meta’s famous AI scientist, seems to be stuck somewhere between denial and rationalization. Gary Marcus, the guy who’s constantly crowing lately about how he “won AI,” is clearly stuck in stage 3. However, I don’t think you’re in stage 2, because of that “cognitive closure” phenomenon. Cognitive closure can manifest in both stage 2 and stage 4, but slightly differently.

Cognitive Closure: The urgent desire to arrive at any firm answer, rather than sustain the psychological discomfort of ambiguity or uncertainty. This often manifests as latching onto the first plausible explanation that allows one to stop thinking about a challenging topic.

Now, if you’re a hater, you might just be in stage 3 and looking for ragebait. I have more than my fair share of followers who seem to consume all my content for the sole purpose of raising their own blood pressure. This behavior seems categorically irrational to me, but it’s a common enough phenomenon. Some people call it “digital self-harm,” or more colloquially, “rage farming.”

Rage Farming: The deliberate consumption of content known to provoke anger or outrage, often leading to a kind of emotional addiction driven by the dopamine and adrenaline response to perceived threats or injustice.

This behavior connects deeply to what psychologists call “negative emotional reinforcement.” The process works through several interlocking mechanisms: The initial anger response triggers a flood of stress hormones and neurotransmitters, creating a physiologically aroused state. This state can become addictive because it provides a sense of righteous certainty and moral clarity. The anger response also often serves as a defense mechanism against deeper, more vulnerable emotions like fear, uncertainty, or powerlessness.

What’s most interesting is looking at anger through the lens of evolution: anger is protective against injustice. It is a sign that you have been wronged or harmed.

Now, if you’re reading this, finding your blood pressure rising and feeling the impulse to write a nasty comment, please don’t. I don’t want to harm you. If you lob a nasty comment at me, you’ll just get banned and your comments will be deleted. I’m not here to piss you off.

So, most likely, you’re in stage 4, 5, or 6. First, let’s examine some of the differences between dismissal in stage 2 and dismissal in stage 4.

In Stage 2 (Denial), cognitive closure takes the form of what we might call “preemptive dismissal”—actively seeking reasons, however flimsy, to categorize AI advances as irrelevant or fraudulent. This is fundamentally different from honest skepticism because the goal isn’t truth-seeking but rather psychological comfort.

In Stage 4 (Bargaining/Rationalization), we see what I’d call “sophisticated denial”—using intellectual frameworks to create distance from the implications. This often manifests as what philosophers call deflationary arguments—complex reasoning that aims to minimize or trivialize significant developments.

For those at Stage 4: Rationalization

If you resonate with this state (you’re engaging in good faith and not just trying to dismiss AI out of hand, but you find yourself making “it’s just” comparisons), you’re likely solidly in stage 4.

This is a good sign! You’re trying to integrate this new technology into your epistemic scaffolding. Your brain is paying attention to cognitive dissonance, and rather than reacting overtly negatively, you are actively trying to make sense of it.

There are a few key strategies you can use to finish moving through this phase, and they may or may not be comfortable depending on your intellectual sophistication.

Try to prove yourself wrong. For the truly curious, just ask yourself simple questions like “What if I’m wrong here?” Engage in genuine perspective-taking, or “steelman” the opposing arguments. What if AI truly is smarter than humans? So what? How would you measure that? What if AI truly could become sentient? So what? What does that mean?

Just learn about it. Use the AI, talk to people who use AI, read all about it. Through learning, you’ll have some of your ideas validated, and some of them challenged. But, most importantly, you’ll learn it’s not as big and scary as you thought it was. In hindsight, you might think your initial reactions were a bit silly.

Now, you might be looking at the list and thinking “Dave, I would rather stay skeptically engaged than move to stage 5, existential dread.”

Well, wish in one hand…

The Quagmire of Depression (Stage 5)

Lots of folks get stuck here. Doomers like Eliezer Yudkowsky, Roman Yampolskiy, and Connor Leahy are prime examples. They have so much terror (and social status) because of their convictions that “AI will inevitably kill everyone” that they are vomiting their fear onto the rest of the world. Now, I personally have much empathy for people stuck in the pit of existential dread. What I don’t respect is people who want to drag everyone else down with them.

As previously discussed, there are a few ways that this dread can manifest:

Extinction Terror: The idea that AI must inevitably kill everyone is an easy thing to latch onto because of the availability heuristic. You’ve seen Terminator, The Matrix, and Age of Ultron so it’s very easy for you to imagine a scenario like these coming true. This is a cognitive bias.

Loss of Economic Agency: Let’s say you either work through the extinction fear or that just doesn’t resonate with you. However, you’ve now gotten to a point where you accept that AI is on the rise, you’ve looked at the data, and you realize this train ain’t stopping. Well… what the hell does that mean for my job? People don’t really want to work, but what they do want is financial security or economic agency—the ability to take care of themselves and their family. If you concede that AI is on a meteoric trajectory, then it’s only a matter of time before it overtakes your skills and competences. And then what? You feel like you’re up the creek without a paddle! That’s what!

Cyberpunk Dystopia: Even if you make it through the first two types of despair, there’s always good old corporate avarice and government complicity! Yessirree guys and gals, never underestimate the power of the greed-corruption duopoly! In point of fact, there are a lot of reasons to believe we’re caught in a dystopian attractor state already! So even if AI doesn’t kill everyone and even if we can manage to distribute the benefits to society, we the little people will just have to tolerate subsistence on UBI, and the elites will just drink their champagne on Mars with Elon Musk.

If you’re stuck in the pit of extinction anxiety, my first piece of advice is to dramatically change your information diet. Stop listening to Liron Shapira (no relation, thank god) on Doom Debates, or to the For Humanity Podcast (which I made the grievous mistake of guesting on). You really need to recognize that you are biologically hardwired to view threats as far more significant than opportunities. Your poor little monkey brain simply did not evolve to grasp global catastrophic threats, and AI need only be a plausible threat to activate your amygdala. Next, you can start to recognize the pattern: these men, these “Doomers,” are all textbook examples of doomsday prophets. I wrote about that quite extensively here:

The Shills and Charlatans of AI Safety

Their core arguments rest on several incoherent premises. They simultaneously claim that AI systems will be both incomprehensibly alien in their cognition yet somehow predictably malevolent in human-relevant ways. They assert that we cannot understand superintelligent AI systems, yet claim to predict with high confidence how these systems will behave.

The epistemology becomes particularly circular when examining their responses to counter-evidence. When AI systems don't exhibit the predicted dangerous behaviors, this is taken as evidence that they are deceptively aligned—essentially creating an unfalsifiable hypothesis that treats both confirmation and disconfirmation as supporting evidence.

In short, beware the snake oil salesmen. They want to sell you books. They want to remain famous and relevant.

Now, if you’re worried about the loss of economic agency, then that’s a more sophisticated conversation! To engage with this, let’s start with some cognitive reframing.

Economic Agency: The fundamental human need for reliable access to resources and the ability to shape one’s material conditions. This is distinct from employment itself, which is merely one historical mechanism for achieving agency.

The key insight here is the distinction between the mechanism (jobs) and the underlying need (agency). This recalls Marshall McLuhan’s observation that we often mistake the medium for the message. Just as previous generations mistook horses for transportation itself, we’ve conflated jobs with economic security.

Now, I’ve written quite extensively on Post-Labor Economics, but the TL;DR is this:

The establishment believes in a “consumption-based economy,” meaning that governments, billionaires, and economists the world over believe that economic growth and prosperity are predicated upon consumer demand, which requires that everyone have money to spend (economic agency).

Therefore, if machines come through and nuke all the jobs because they are better, faster, cheaper, and safer, this would lead to what I call the “economic agency paradox.” Basically, automation takes all the jobs, paradoxically lowering the prices of all goods and services while simultaneously removing people’s ability to pay for them. It’s a deflationary vicious cycle.

But since the elites and the powers that be believe in a consumer-driven economy, they have good motivation to find new solutions, starting with things like UBI and perhaps moving toward a more investment-based economic paradigm. Furthermore, it’s entirely possible that human-centric jobs will rise dramatically. We will work together to find a solution. And anyway, if AI creates a future of abundance, it will be much easier to get your needs met.

But seriously, just browse my Substack articles tagged with Post-Labor Economics: https://daveshap.substack.com/t/post-labor-economics

Turning the Corner: Stages 6 and 7

Once you process the ontological shock, the grief, and do the inner work of overcoming institutional codependence, you’ll start to feel more optimistic. Hopeful about the future. The AI revolution will be interesting and exciting, and it’s a privilege to live through this dynamic time!

You’re no longer in active denial or dismissal of AI and its potential, nor are you angry about the possibilities. You’ve done a lot of intellectual work to integrate the reality of AI into your epistemic frameworks, and you’ve looked at the data, evidence, and discourse and you have a pretty good feeling that you know where things stand. But it’s not over yet.

One of the most startling things I’ve noticed is that even people who have worked through everything up to this point are still getting stuck in “normalcy bias.” I’ve been working with generative AI since 2019 and I’ve seen several paradigm shifts already in terms of what AI is capable of. My mantra is “just wait six months.” Whatever AI can’t do today, just wait six months and then reassess.

Here’s an example of normalcy bias still hampering people who have moved through the various stages of grief: I was talking with an angel investor who is focused on AI and blockchain. He was asking me about the role of AI and humans in DAOs (decentralized autonomous organizations) and I noticed something rather peculiar; he kept trying to shoehorn humans into the roles. He kept assuming that humans are still going to be doing all the work in the future. I noticed a collision, some serious cognitive dissonance. He’d gotten locked into the view that AI agents won’t improve much from where they are today, even though they haven’t even gone commercial yet.

Normalcy Bias in AI Progress: The tendency to unconsciously treat the current capabilities of AI as a stable endpoint rather than a momentary snapshot in an exponential progression. This manifests as people attempting to plan for a future where AI capabilities remain roughly where they are today, even when they intellectually understand that dramatic improvements are likely. Even after moving through all stages of ontological shock and accepting the transformative nature of AI, many still unconsciously anchor their thinking and planning around present-day capabilities, forgetting that AI represents an inexorable progression that will continue to produce paradigm shifts for the foreseeable future. This cognitive bias often appears as attempts to shoehorn humans into roles and systems that may be entirely automated or transformed by the time they come to fruition.

Parting Thoughts

All problems are solvable. It would be silly and disingenuous to claim to know with any certainty how things are going to proceed. No, I cannot “prove” that it will go well, nor can anyone else “prove” it will go poorly. Many artists, whose craft and livelihood are on the line, are stuck at stage 3: anger. You can find communities on Reddit, Twitter, and Bluesky dedicated to the idea that “AI must be stopped!” and “AI is bad!”

Doomers like Yudkowsky are trapped in Stage 5. Gary Marcus seems to be stuck in Stage 4. A big reason I had to step away from my communities is that many members of my audience are stuck somewhere between stages 2 and 5, ranging from skepticism and angry lashing out to rationalization and existential despair. And I personally didn’t have much patience for it. But now that I have a framework for guiding people along this journey, I might consider revisiting them.

![The Matrix - Welcome To The Desert Of The Real [HD]](https://substackcdn.com/image/fetch/$s_!snkS!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F6c5df635-70d4-4e7c-8464-0ccad53b21cf_686x386.jpeg)
