Books don't stack up to AI anymore

I'm reading fewer books, but more words.

Have you ever gone on a book binge? You know the indulgence: you have some specific interest or problem you want to solve, so you hit up Amazon or Thriftbooks and load up your cart, ready to drink in that sweet firehose of knowledge.

At first, I just used AI to help me find books, as you can just ramble at your favorite chatbot about whatever’s tickling your brain or heart at the moment and ask for a reading list. Heck, that was my wife’s thesis—using GPT-3 as a stand-in for a librarian.

After all, AI is trained on the entire internet and a ton of pirated books, so not only should it be a good reference librarian, it can probably tell you exactly what book you need!

Then you check out and wait patiently for your books to arrive. If you’re lucky, your books are already at your local Amazon warehouse and will be there the next day. Sometimes, though, you have to wait for snail mail to deliver your books from BFE Wisconsin.

Either way, the day arrives, and your books are in that green Thriftbooks slip or the white Amazon bubble wrap. The excitement builds as you tear through the packaging. Sometimes I even forget which books I ordered. The first thing we do at my house is share: “Hey honey, look at the book that just arrived!”

As a librarian, my wife looks at the imprint, the copyright, and all that other librarian-y stuff to understand who wrote this book, when, why, and so on.

But this year… I haven’t been going through this ritual as much. My last tranche of books was a stack of 6 books orbiting around the theme of money. A spiritual view of money and value, repairing your relationship with money. You know, the kind of thing that was super popular in the 90s.

One by one, I sat down to cozy up and read through these books, and one by one, I set them aside, eventually shuffling them to our “to be donated” pile.

I mentioned this phenomenon briefly on Twitter and LinkedIn and only got some mild engagement. At the time, it was more like “huh, I just realized that I rely on AI way more than books…” and sort of left it at that.

But now that a few weeks have gone by, I realize “no, this is actually huge.” I don’t know if this phenomenon extends beyond just me yet, but here goes. Here’s why I think I’m reading fewer books, and what this could mean about AI and the future of our epistemic landscape:

Signal vs noise

For ages, we’ve bemoaned shortening attention spans—first radio, then TV, then video games, and now algorithmic shorts. I asked my niece last year “what do you watch on YouTube?” and she sort of froze up. “Uhhh…. Idk…” I asked her what channels she subscribes to. None.

What. The. Actual. Heck.

“So you don’t put any effort into curating your feed?”

Nope.

Kids these days just rawdog the algorithm. We’re all doomed.

But in all seriousness, as I get older, I realize that time is quite precious. I want to ensure that I’m using all my time wisely. It’s why I have a famously strict moderation policy—I do not tolerate online behavior that I would not tolerate IRL. My time, my cognition, and my emotions are mine to control and expend as I see fit.

What does this have to do with books?

Well, once you’ve read enough books, watched enough video essays, and read enough blogs, you start to notice patterns. There’s nothing new under the sun. The same tropes, ideas, and hooks get recycled ad infinitum. Eventually, you want signal, by which I mean “novelty plus value.” Hell, isn’t that what Substack is all about?

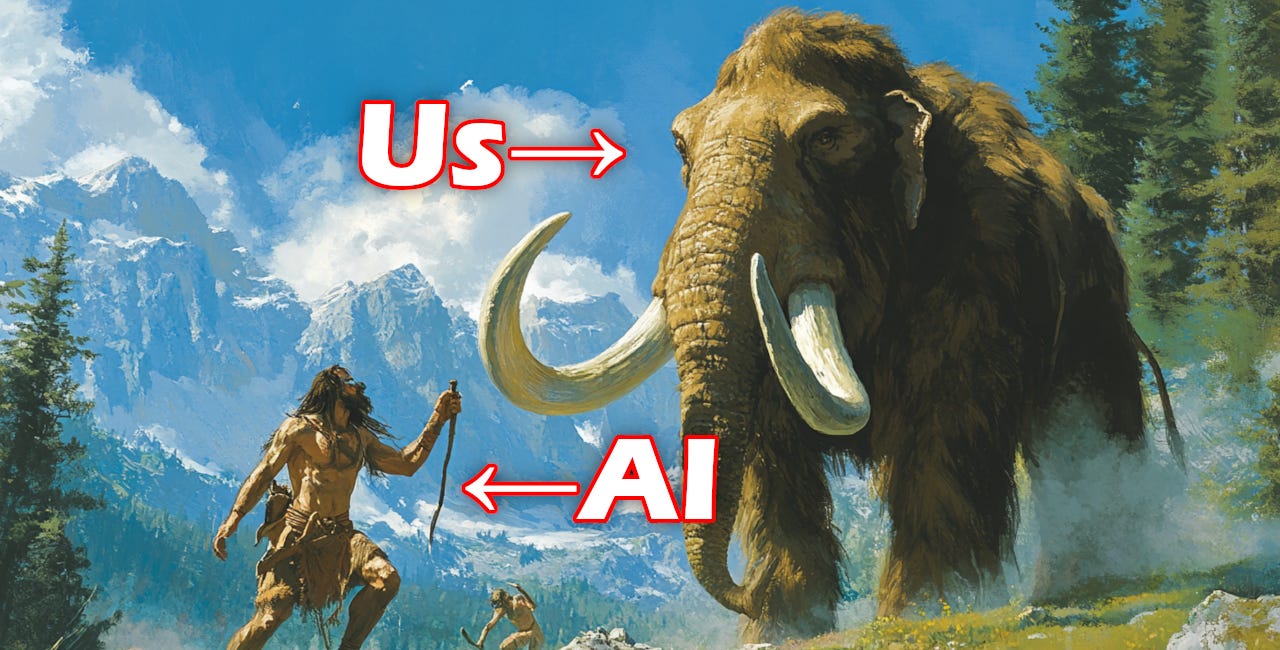

With my last stack of books, I realized they were all just noise. Low insight, low novelty. Whatever wisdom they’d offered the world when they were written, it has been fully metabolized and integrated into the global noosphere. I might as well just ask AI about my problem specifically. Why? The AI has already read all the books, plus all the commentary, meta-commentary, reactions, and analysis. Plus, the AI is interactive.

AI is “just compression”?

This reminds me of something people said when GPT-3 and ChatGPT were new. They were trying to get a mental handle on “what the heck is generative AI?” One deeply flawed metaphor they came up with was “it’s just a compression algorithm.” I mean, technically, sure. You compress many terabytes of data into a model that is a few gigabytes. So, yeah, in raw mathematical terms, it’s not entirely incorrect to say that a neural network is a kind of compression.

But that’s also like saying a house is just a refactored tree. The affordances of the new structure create entirely new possibilities. The same is true of AI as a source of information. It’s not just “Wikipedia compressed” or “Google compressed”—it’s clearly more than that.

At the same time, I can’t argue with the idea that AI has compressed millions of books into accessible, bite-sized pieces. If you want to understand just one particular thing, or have one particular problem, with just a short conversation you can generally move on. Instantly. No foraging through books. No hours spent reading.

I ran this idea by my wife and she agreed: yes, absolutely, AI can be faster and more efficient than books (keep in mind she’s a librarian, so she’s heavily biased towards books!).

The exceptions

Now, this isn’t to say that “books are completely dead,” and there are a bunch of reasons why.

First, some people read out of habit and as a hobby. My wife is presently reading about Caterina Sforza, the Tigress of Forlì. Sure, you can get the facts of her life from an AI pretty quickly, but there’s just something different about settling in to read about this woman (who was, by all accounts, extremely extra, to the point they had to tone her down for all the adaptations they’ve made of her, like in Borgia). My wife literally just enjoys reading, something I lost many years ago when I started noticing patterns and trends and recycled tropes. My brain is just too novelty-seeking. Reading must be productive, dammit!

To extend that, though, another reason books aren’t gone just yet is that they’re still one of the best ways to learn a whole new framework. I’m working on one such book right now, THE GREAT DECOUPLING, which is a pedagogical framework as much as it is a solution or economic treatise. Many people have asked, “Why not just make it something I can talk to an AI about?” Well, because that’s just lazy. If you’re not willing to spend a few hours with a topic in depth, then you probably don’t really want to know it in the first place, right?

Lastly, I’ll say that the social aspect of reading will probably never be dislocated by AI. “Hey, did you read the last ACOTAR?” My wife and her friends are always going on and on about the latest fads and trends. Shared media is shared culture. It’s like when Apple TV and Netflix and Amazon and Hulu all fragmented. I couldn’t ever talk about Ted Lasso with my friend because I didn’t have Apple TV. Now that I do, I’m a few years too late. This “media atomization” is a huge problem for society—we don’t even have the same binding myths to share anymore, except for summer blockbusters or cultural phenomena.

I’m not going to wring my hands and bellyache about the inevitable collapse of society due to AI. I just thought this was an interesting observation in epistemics. Why read books myself when my chatbots can read them for me, and then act as a personal information concierge? I’ve been using this stuff since GPT-2, as well as teaching it, so I’m an expert prompter and an autodidact.

But not everyone is like that.

As with any information source, skill and knowledge have a big impact. One worry I have is that AI will merely amplify biases that people already possess. Just as you can fall victim to your own “search bubble” in Google, if your AI never challenges you, expands your horizons, or offers something new, you might not think to ask for it. Even with expert prompting, it takes deliberate effort to pull on those threads of novelty and learn something new.

Sure, many chatbots now have a “learning mode” but they haven’t made headlines.

If you want a little bit more, take a look at one of my top blogs of all time:

AI is already smarter than you, you're just too stupid to see it

Justin Kruger and David Dunning published their now-infamous paper “Unskilled and Unaware of It: How Difficulties in Recognizing One’s Own Incompetence Lead to Inflated Self-Assessments” in the Journal of Personality and Social Psychology in 1999. This is the paper that introduced the “Dunning-Kruger” effect.

You mostly left out discussing works of fiction. These are art, not merely information. It would be horrible if we stopped reading/consuming works of imagination. That's an essential part of being human.

As a spd rdng (speed reading) coach and author with 25 years in the field, I’ve watched speed reading evolve alongside technology. AI is doing for knowledge what electricity did for lighting – replacing candles with lightbulbs. Just as spd rdng lets people extract what matters and move fast, AI now delivers knowledge/wisdom instantly, bypassing books and people altogether. Yet books still matter: fast and slow, spd rdng and deep dives, are the yin and yang of real learning. For example: learning to juggle or perform a magic trick from a video activates rapid visual and motor connections, while using a book engages imagination, problem-solving, and deeper cognitive processing ('digestion' if you like), so each method taps distinct areas of the brain and senses.

The more you know, the faster you spot a signal in the noise – but now, with AI, access is close to immediate. We’re not losing books (just as people still use candles :), but developing illuminating, holistic new ways to learn. After all, if AI is to knowledge what electricity is to lighting – (speed) reading is knowing how to use the switch, but also how to read by candlelight or fire when the power goes out. And throughout history, it’s the fire of wisdom, with its longevity, that endures and illuminates, regardless of the tools at hand.