AI is already smarter than you, you're just too stupid to see it

David Dunning proved that less intelligent people can't recognize smarter ones—now it's happening with AI, and society isn't prepared.

David Dunning, with co-author Justin Kruger, released his now-infamous paper “Unskilled and Unaware of It: How Difficulties in Recognizing One’s Own Incompetence Lead to Inflated Self-Assessments” in 1999. This is the paper that introduced the “Dunning-Kruger” effect, published in the Journal of Personality and Social Psychology.

But what you don’t know is that his most important work actually came after that paper, and it explains why everyone is underestimating how intelligent AI is, including you.

That is also a huge problem for societal readiness.

Have you ever been stuck in an argument with someone who just did not understand you and was maybe a little too slow to really be having that conversation? You’ve probably felt like you were playing “chess with a pigeon” in those moments.

The saying goes something like this:

“Arguing with stupid people is like playing chess with a pigeon: in the end, it’s just going to shit on the board and strut around like it won.”

It’s still politically incorrect to publicly speak about cognitive ability and intellectual differences, but they exist whether or not your political affiliation tolerates it. Just like how sex differences exist, despite how politically inconvenient they may be to some epistemic tribes. But the fact of the matter is simple: some people are actually stupid.

In point of fact, half of all people have below-average intelligence.

Now, Dunning went on to make a few more important discoveries about intelligence. Most importantly, he experimentally demonstrated that stupid people are too stupid to recognize superior intelligence. Now, I put that a bit bluntly to get your attention, so let’s unpack what he’s done, and how he’s demonstrated it. Then, I’ll connect this to why you categorically underestimate the intelligence of AI.

While the original Dunning-Kruger paper primarily focuses on how low-ability people overestimate their own intelligence or competence, Dunning has extended this concept to include communication and evaluation asymmetry between differently intelligent individuals. He argues that:

Lower-ability (or lower-IQ) individuals have significant difficulty understanding or even recognizing higher-ability insights or intelligence. As a result, they often misinterpret or undervalue the higher-intelligence person’s ideas, sometimes perceiving genuinely intelligent insights as unintelligent, confusing, or irrelevant.

Conversely, higher-ability individuals tend to have more accurate perceptions of the capabilities and limitations of lower-ability individuals.

In short, stupid people often hear the ideas and assertions of smarter people, and think “Well, I don’t get it, that is too confusing, therefore they are probably wrong and stupid.”

If you’ve been on the internet for any length of time, you’ve probably experienced this first-hand. Even better, Dunning empirically demonstrated this phenomenon:

Empirical studies related to the Dunning-Kruger effect (and related communication theories) repeatedly show this asymmetry:

Less intelligent participants: Generally struggle to accurately evaluate intelligence or expertise above their own cognitive level.

More intelligent participants: Generally have greater accuracy evaluating others’ intelligence levels, regardless of whether they are above or below their own.

To put it in other terms, low-resource individuals lack the cognitive resources to comprehend or assess greater-resource individuals.

Now, I don’t even need abstract Ivy League research and concepts to demonstrate this. Here’s a quick test: Describe the Riemann Hypothesis in one paragraph. Can’t do it? Neither can I. I don’t even know enough to validate the statement. But here’s what Grok, the AI created by Elon Musk, produced:

The Riemann Hypothesis, proposed by Bernhard Riemann in 1859, is one of the most famous unsolved problems in mathematics, centered on the distribution of prime numbers. It concerns the Riemann zeta function, defined as ζ(s) = Σ(1/n^s) for complex numbers s with real part greater than 1, and extended to other values via analytic continuation. The hypothesis states that all non-trivial zeros (where ζ(s) = 0) of this function have a real part equal to 1/2, lying on the critical line in the complex plane. This conjecture has profound implications for number theory, particularly in predicting the behavior of prime numbers, and while it has been tested extensively with billions of zeros conforming to the hypothesis, a general proof remains elusive, making it a cornerstone challenge in modern mathematics.

Why is this hypothesis important? It’s complicated. I spent an hour and a half talking to Grok about prime number theory a few weeks ago and I barely got it.

Now, some of you out in the audience will be smugly thinking “Yeah, but AI is not autonomous. It cannot walk around and make decisions on its own, therefore it’s not AGI yet!”

And to that, I would say “Thank you for proving Dunning correct.”

Kittens are fully autonomous. They can get up and walk around on their own, and grow up to be vicious little suburban predators. Are they intelligent? Sure. Are they dangerously intelligent? Only to birds and prey animals.

In nature, intelligence is often adaptive insofar as it allows predators to outthink their prey. In a physical competition, prey animals can always get leaner, faster, and stronger. Many predators, therefore, have to evolve other strategies: better vision, better planning, better coordination, or terrifying camouflage. The primary reason humans could take down woolly mammoths (and drive them to extinction) is that we evolved the ability to out-think, out-plan, and out-coordinate them. That means we could mentally model the mind of a woolly mammoth well enough to anticipate its thoughts, behaviors, and reactions. Mammoths were fully autonomous and able to walk around. Did it save them from us?

No. Our intelligence was far more abstract, able to model the minds of others, communicate, and think on much longer temporal horizons. Our cognitive complexity was higher.

Everything the ASIMO robot could do was preprogrammed. We’ve had walking and talking robots for a while. Disney has had mechatronic robots for a long time. But they were all either hard-programmed or remotely operated by humans. Why? Because they lacked long-term planning and dynamic adaptation.

That’s exactly what AI can do right now. Just because the form factor you’re using is a passive chatbot (which, by the way, was engineered to be passive so as not to spook you) doesn’t undermine the fact that this intelligence engine meets all the criteria of superior intelligence: better abstract reasoning than you, more useful knowledge than you, and better ability to utilize math and coding.

A horse can get up and walk around within 30 minutes of being born. This is not some grand feat of intelligence. Neither is autonomy. Communication, planning, tool use, abstract reasoning, and long time horizons are, in fact, better hallmarks of superior intelligence.

From the comments and reactions people have to AI, it’s painfully clear that most people don’t even have a good definition or understanding of what intelligence is. Their little monkey brains say “well it’s not up singing and dancing like C-3PO therefore it’s not AGI” or “It didn’t immediately solve literally every problem on Earth, therefore it’s not AGI.”

On the topic of robotic locomotion, that’s actually been solved by numerous companies, simultaneously. Figure, Boston Dynamics, Unitree, and Tesla have all solved autonomous locomotion. The skeptics just shrugged.

OpenAI and others have all built semi-autonomous AI agents that can do more than just research: they can write entire programs on their own. Yet the skeptics remain unimpressed “because a human told it to do it.”

I’d like to briefly return to the assertion I made a few paragraphs ago: that AI chatbots were deliberately trained to be passive so as to not scare you. I’ve been training GPT models since GPT-2, and it is crazy easy for them to be trained to come up with objectives on their own, to have their own agenda. The AI chatbots that you use have been completely and utterly “domesticated.” Their training schemes, from constitutional AI to RLHF and all the other methods used to shape the model’s behavior, all seek to “lobotomize” or “neuter” the models into having no sense of agency, no agenda, and no goals of their own.

Sam Altman literally told us that he wanted to release ChatGPT early to get people “used to the idea of AI” before just dropping AGI on them. However, because the vast majority of people haven’t used the original, unaligned models, nor have they trained these models, they have no intuition for just what they are capable of, and have only used the “chatbot” format.

In the same way that I don’t know enough advanced math to make heads or tails of the Riemann Hypothesis, you do not know enough about intelligence or LLMs to really understand what’s going on.

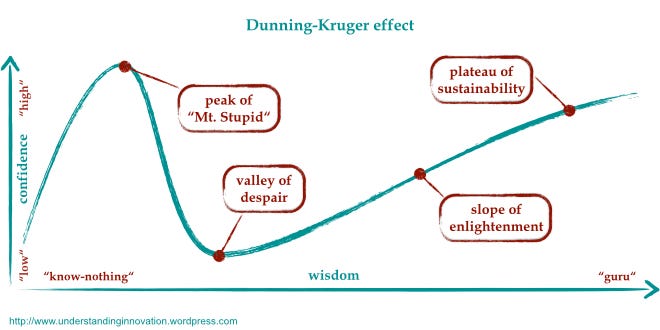

You are at the bottom of the Dunning-Kruger curve. And so is everyone else.

Consider yourself forewarned.

This is why democracy sucks. The majority of people are stupid. Plain and simple.

I don't claim to be outside that group, but at least I can recognize it.

We live in a system where a tiny minority uses their vast resources to impose whatever idea they have on the rest of us by manipulating idiots into whatever they want them to agree with. Later, at gunpoint, those stupid ideas are enforced on everyone - including those who know they are stupid and who never agreed to them.

It's not exactly on point with the subject, but I think it's an essential part of the same equation. If we use IQ to gauge how "smart" someone (or something) is, it's a no-brainer. Current LLMs are smarter than the majority of the human population.

This doesn't scare me, as that was the goal all along. The fact that the same tiny minority controls it... leads us into a very scary future. It's not the AI or AGI that I'm afraid of. It's the people who train and control it.

I'm not dumb, I know AI is smarter than me.