We've Hit Superintelligence Escape Velocity (ASI)

I suspect there are upper bounds to ASI, but we're going to hit that milestone real soon here.

Let me state this as plainly as possible right up front: if I understand what’s going on correctly, we are now in the inexorable lead-up to ASI. Let me explain with a few graphs.

First up is a graph of GPQA scores, provided by Ethan Mollick:

Let me unpack this graph first, because what this implies is extreme, and then I’ll explain how they’re achieving this.

The GPQA graph reveals something profound: AI models are demonstrating generalization beyond their training distribution. While previous models could only reliably answer questions similar to their training data, these scores show the models solving complex problems that could not have been present in their training sets. When an AI system achieves an 85%+ score on tests designed for specialized PhDs, it cannot simply be pattern matching against memorized information—the space of possible doctoral-level questions is too vast, and many test questions explicitly probe novel scenarios. The exponential trend line, which shows no sign of plateauing even at human-expert levels, implies these models have developed genuine abstract reasoning capabilities that allow them to solve problems they’ve never encountered before. This is fundamentally different from traditional machine learning which struggles to generalize beyond its training examples. The consistent upward trajectory on increasingly difficult problems suggests we’ve unlocked a core mechanism of intelligence itself—the ability to reason about entirely new scenarios using abstract principles rather than memorized patterns. This represents a phase transition in AI capability that few thought possible this soon.

In short, “it can invent new physics.”

Keep in mind that o3 is the model OpenAI is currently rolling out to beta users. Some of my friends and other insiders are losing their minds over o3’s capabilities, and I’m starting to see why.

Now, the reason I say we’ve achieved “escape velocity” is this graph, provided by Louie Peters:

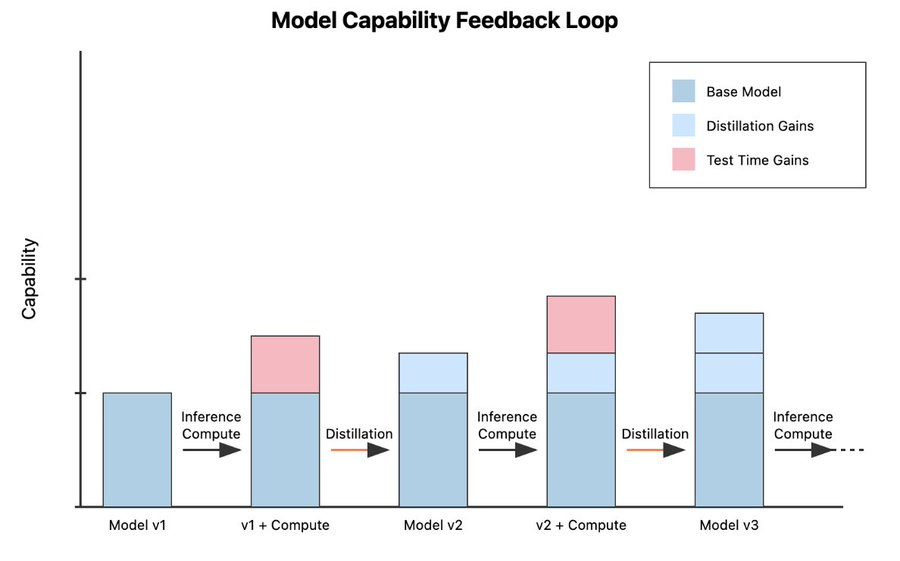

Let me explain this graph now:

The model capability feedback loop graph reveals the fundamental mechanism behind rapid AI advancement. When an AI model reaches the limits of available training data, it can keep improving through a process called knowledge distillation, in which a larger “teacher” model is used to train a more efficient “student” model. This achieves roughly a 10x parameter reduction while actually improving capabilities. The student model crystallizes and refines the knowledge of its teacher, much as human knowledge advances across generations of scholars.

But the real breakthrough comes from combining this with inference-time compute scaling, where models achieve dramatically better performance simply by being allowed to think longer about problems. This creates a powerful oscillation: first distill knowledge into a more efficient form, then use more inference-time compute to push capabilities even further, then distill those new capabilities into the next generation. The graph shows how each cycle compounds these gains: distillation provides 10x efficiency improvements while preserving or enhancing intelligence, and inference-time scaling delivers up to 1000x effective improvements in model capabilities. The result is a self-reinforcing cycle that transcends the limitations of traditional training data. We’re no longer constrained by the finite amount of human-generated content available for training; instead, we’ve discovered a way to bootstrap increasingly capable systems through this alternating process of compression and expansion.
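For readers who haven’t seen distillation up close, here is a minimal Python sketch of the textbook soft-target loss: the teacher’s outputs are softened with a temperature, and the student is penalized by the KL divergence between the teacher’s distribution and its own. This is the generic technique, not a description of how OpenAI or anyone else actually trains their models, and the logits below are made-up numbers for illustration only.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2  # T^2 keeps the loss scale comparable across temperatures

# Hypothetical logits over four answer choices, for illustration only.
teacher = [4.0, 1.5, 0.5, -1.0]
close_student = [3.8, 1.4, 0.6, -0.9]  # roughly agrees with the teacher
far_student = [0.0, 0.0, 3.0, 0.0]     # prefers a different answer

print(distillation_loss(teacher, close_student))  # small: student mimics teacher
print(distillation_loss(teacher, far_student))    # large: student disagrees
```

In a real training loop, this loss (often mixed with an ordinary loss on ground-truth labels) would be minimized by gradient descent over the student’s parameters; the sketch only computes its value.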

So let me lay it out for you plain and simple:

Models today have an IQ of about 130 (give or take). This is the IQ of your typical top-notch doctor or lawyer.

It looks like o3 is one standard deviation above that, so an IQ of about 145 across all domains. This is the IQ of roughly one-in-a-thousand top performers.

The next iteration of this, which we should expect in about 18 to 24 months, would have an IQ equivalent of around 160, which is more like Einstein, Oppenheimer, and Feynman, but across all domains.

I am not being hyperbolic.
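To make the standard-deviation arithmetic concrete, here is a small Python sketch. It assumes the usual deviation-IQ convention (mean 100, standard deviation 15) and a normal distribution, and it treats a model’s “IQ equivalent” as if it sat on the human curve, which is an assumption rather than something the graphs establish. Real IQ tests are not normed this far into the tail, so the rarity figures for the highest scores are just bell-curve math.

```python
import math

MEAN, SD = 100.0, 15.0  # assumes the common deviation-IQ convention

def rarity(iq):
    """Return (z-score, 'one in N' rarity) for an IQ score on a normal curve."""
    z = (iq - MEAN) / SD
    tail = 0.5 * math.erfc(z / math.sqrt(2))  # fraction of people scoring higher
    return z, 1.0 / tail

for iq in (130, 145, 160, 185):
    z, one_in = rarity(iq)
    print(f"IQ {iq}: {z:+.2f} SD, about 1 in {one_in:,.0f}")
```

On these assumptions, IQ 145 (three standard deviations up) works out to roughly one person in 741, so “one-in-a-thousand” is in the right ballpark.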

So does this mean we’re heading for an intelligence explosion? Will ASI have an IQ of 2000? Probably not. Here’s why.

There is likely a ceiling, or upper bound, to useful intelligence. We have plenty of reasons to believe that there are mathematical limits to “useful intelligence.”

There are indeed strong mathematical hints that absolute, unbounded intelligence may be unattainable, at least in any practically “useful” sense. Gödel’s incompleteness theorems show that any consistent formal system whose axioms can be mechanically listed, and which is powerful enough to express basic arithmetic, contains statements it can neither prove nor disprove, indicating that no single framework can capture all truths. Turing’s Halting Problem further demonstrates that certain questions are provably undecidable by any algorithm. Complexity theory suggests that for many problems, the best known algorithms need resources that grow exponentially with the size of the input, constraining the capacity of even vastly sophisticated minds. Taken together, these limitations imply that ever-increasing intelligence confronts irreducible gaps and intractable complexity: clever heuristics and sheer processing power can stretch the boundaries of what is knowable, but there is no obvious route around the fundamental ceilings that mathematics and computation impose.
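The complexity point is easy to see with a little arithmetic. The Python sketch below assumes a hypothetical machine that checks one billion candidate solutions per second, applied to a problem where brute force means trying all 2^n yes/no assignments. It is only an illustration of exponential growth; it says nothing about whether a cleverer algorithm exists for any particular problem.

```python
import math

CHECKS_PER_SECOND = 1e9  # hypothetical machine: one billion candidate checks per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def brute_force_years(n, checks_per_second=CHECKS_PER_SECOND):
    """Years needed to try every one of the 2**n yes/no assignments."""
    return 2 ** n / checks_per_second / SECONDS_PER_YEAR

def extra_variables(speedup):
    """How many more variables a given speedup lets you handle in the same time."""
    return math.log2(speedup)

for n in (30, 60, 90):
    print(f"n={n:3d}: {brute_force_years(n):.3g} years")

print(f"A 1000x faster machine handles only ~{extra_variables(1000):.1f} more variables")
```

Because each extra variable doubles the work, a 1000x faster machine buys only about log2(1000) ≈ 10 more variables, which is the sense in which raw processing power barely moves this kind of ceiling.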

I personally liken this to Type Ia supernovae, which all peak at nearly the same maximum brightness. That’s why they are used as “standard candles.” So, if I’m right, we’re going to hit full ASI within about 2 years, maybe 3 or 4 if you want to wait until models have an equivalent IQ of about 185, which is insane.

Beyond that, the universe is simply mathematically too complex, with too many things that are intrinsically unknowable and unpredictable to really benefit from higher intelligence (if it is even theoretically possible).

So what comes next?

Speed and efficiency are what come next.

Let’s imagine that I’m right: that there are indeed diminishing returns to more intelligence, that there’s a sort of upper bound to useful intelligence, and so beyond a certain point you won’t get any more utility. What then? It comes down to time and energy. Think of it this way: if this IQ 185 machine requires a gigawatt of juice to run, it’s still not very efficient or useful. That energy would be better spent on humans. But what if you can get it down to a megawatt? Or a kilowatt? Or a few hundred watts? Now you can scale it laterally and end up with millions or even billions of clones.
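Here is the lateral-scaling arithmetic as a quick Python sketch. It holds the 1 GW figure from the example fixed as a hypothetical power budget and uses 300 W as a stand-in for “a few hundred watts”; it ignores cooling, networking, and other overhead, so treat the counts as upper bounds.

```python
BUDGET_WATTS = 1_000_000_000  # hypothetical fixed budget: the 1 GW from the example

def copies_supported(watts_per_copy, budget=BUDGET_WATTS):
    """How many model instances fit in a fixed power budget (ignores cooling and overhead)."""
    return budget // watts_per_copy

scenarios = {
    "1 GW per copy": 1_000_000_000,
    "1 MW per copy": 1_000_000,
    "1 kW per copy": 1_000,
    "300 W per copy": 300,  # hypothetical stand-in for "a few hundred watts"
}

for label, watts in scenarios.items():
    print(f"{label}: {copies_supported(watts):,} copies")
```

At 300 W per copy, the same gigawatt supports about 3.3 million copies; by the same arithmetic, a billion copies at 300 W each would draw roughly 300 GW.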

Greater energy efficiency also generally means “it runs faster.” So if you’re caught in an arms race between ASI-equipped nations, the question isn’t just whether you have ASI, but how many instances you can run in parallel and how fast they operate. Beyond that, it’s also a question of how well they coordinate and how autonomous they are.
