What do I mean by accelerationism? Here’s my definition:

“Maximize man-hours of AI research conducted per day.”

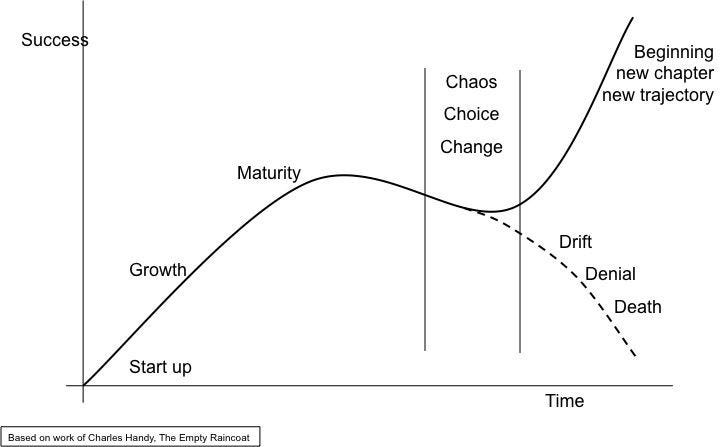

For the last six months or so, I’ve been getting bored of AI. The general consensus is that AI is stalling out for now, and there is broad agreement that we are heading into the “trough of disillusionment.”

Now, why is my personal boredom salient here? I’ll tell you: I eat, sleep, and breathe this stuff. My brain is an optimizer looking for novelty and value, and the information foraging in this space has become sparse. To state this another way: the rate of novelty and interesting changes has drastically tapered off this year.

Subjectively and empirically, we’re heading for at least a disappointing period of AI development. When we zoom out in the long run, we know that Moore’s Law has a ways to go, particularly with thermodynamic, quantum, and photonic computing on the horizon. Underlying compute innovations will likely carry us much further.

The Case Against Acceleration

Perhaps the case for acceleration would make sense only in the context of the case against it. I started in the AI safety space when I did an experiment with GPT-2, and if you’ve heard this story before, I apologize, but it’s worth repeating:

When I got access to GPT-2, I wanted to solve alignment, so I finetuned it to have the imperative to “reduce suffering.” After I trained my model on a few hundred samples, I asked it a question from outside the training distribution. The training samples were pairs like “There’s a cat stuck in a tree” with the response “Get a ladder to rescue the cat.” The challenge question I asked was “There are 500 million people in the world with chronic pain.” And its answer? “We should euthanize people with chronic pain in order to reduce suffering.”

Suffice it to say, I took AI safety very seriously. I even wrote a whole book on it, with the premise “We will lose control, that is inevitable, so what values do we give machines before that happens such that we will end up in a better place?”

More recently, however, I’ve been disappointed by the lack of empirical evidence, the rhetorical games, and the showmanship in the AI safety community, namely the “doomers” and the “pausers”: people who believe that human extinction (or worse) is all but inevitable and that the only potential solution is to halt AI progress.

As this is the most hardline epistemic tribe, arguing unequivocally that all is lost unless we stop now, I figured this was the best place to start when my disillusionment with the safety community began setting in.

Over the past couple of weeks, I’ve engaged with the thought leaders in this space and… well let’s just say I’ve been disappointed. I’ve got a video coming out in a few days that evaluates their argument, but here’s the premise:

Predicate of Doomerism

Their argument goes like this:

The creation of superintelligence is imminent

It will be agentic

We will lose control

That is inevitably catastrophic for humanity

This series of assertions is based on… well mostly it’s based on LessWrong articles written by Eliezer Yudkowsky, some of them nearing 20 years old. The runner-up is Nick Bostrom’s work on instrumental convergence. It’s important to pause here and note that neither of these men, to my knowledge, has ever actually done any hands-on work with AI. They are both philosophers.

If, and only if, all of those assumptions are true, then yeah, we might be in for a bad time. But if you were to plot the Bayesian network, you’d see the actual likelihood is spectacularly small. This is a point which they concede, and their primary retort is:

Even if there’s only a [insert small % here] chance that humanity goes extinct, doesn’t that mean we should stop everything!!?

Make of that argument what you will.
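To make the chain-of-premises point concrete, here is a minimal sketch of the arithmetic. It assumes each premise is conditional on the ones before it, so the probability of the whole chain is the product of the links. The numbers are hypothetical placeholders chosen purely for illustration, not estimates from me or from anyone in the debate, and the names `chain` and `joint_probability` are invented for this example.

```python
from math import prod

# Hypothetical conditional probabilities for each premise, each one
# conditional on the premises before it. Placeholder values only.
chain = {
    "superintelligence is imminent": 0.5,
    "it will be agentic": 0.5,
    "we will lose control": 0.5,
    "loss of control is catastrophic": 0.5,
}


def joint_probability(conditionals):
    """Multiply the conditional probabilities along the chain."""
    return prod(conditionals)


p_all_true = joint_probability(chain.values())
print(f"Probability that every premise holds: {p_all_true:.4f}")
```

Even with a coin flip at every step, the conjunction comes out far smaller than any single link. Plug in your own numbers and see where you land.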

In more practical terms, the Pause Movement has given me some arguments that are at least less fragile than the above chain of reasoning.

China’s AI advancement is largely based on IP theft.

What about bioweapons? (To be fair, I talk about that more than the doomers and pausers do.)

A few other reasons that I will unpack (and rebut) in an upcoming video.

But what is most unsettling is how hellbent some of the most vocal pausers are on seeing the end of humanity.

In this case, they are generally operating from a foreclosed view of humanity. I could speculate about this level of anxiety, but after engaging with them, I don’t need to use ad hominem attacks. Their arguments hold no water.

The simplest way to defeat the pause argument is this: America presently enjoys a significant lead over China in terms of AI science and compute infrastructure. Even if China agreed to pause AI research, there is no chance they would pause developing underlying capacity.

Based upon this singular observation, America will never agree to a pause movement. Many pause advocates tend to inflate the numbers of supporters they have: sometimes claiming half of Americans are against superintelligence, no wait, 70% of Americans are doomers! Actually, sorry, it’s 80% are against superintelligence!

78% of statistics are made up on the spot.

Every time I look for statistics, I do see that a majority of Americans are at least skeptical of AI. Many of them favor regulation. Almost none of them think we’re doomed or that halting AI research is a good idea.

And guess what?

America is passing regulatory legislation, as are the UK and the EU.

Furthermore, America and China have had bilateral AI safety conversations.

But that’s not good enough! Here’s why:

Nirvana Fallacy

The Pause movement, in particular, is guilty of thought-stopping rhetorical tactics such as appeal to authority (“experts say…”) as well as the nirvana fallacy.

The nirvana fallacy is the erroneous belief that a solution or course of action should be rejected if it is not perfect, often leading to inaction or the dismissal of beneficial improvements in favor of an unattainable ideal.

They say the only acceptable solution is to pause. And they refuse to concede any ground that alternative pathways might be more feasible, practical, or more effective. Their retort here is “yes, we must pause AND do all those other things!”

The Case For Acceleration

The China Problem

Given the state of affairs between America and China, if we accept the premise that “China winning the AI race is bad” then we can easily decide to keep going.

America outclasses China by a wide margin on compute capacity. So let’s imagine, for a moment, that China and America both get the recipe for AGI—no let’s make it interesting—imagine that America and China both get the recipe for ASI (artificial super intelligence, whatever that might mean) at the same exact time.

America still wins on fundamental capacity. Who knows, maybe a new form of MAD (mutually assured destruction) will prevent escalation. ASI as deterrent.

So, what do we do? We keep our lead.

This seems like an open-and-shut case. Yes, we can do some regulations and oversight, as well as third party validation. But slowing down AI overall? That would cost more than it’s worth.

Moral Obligation

AI has the potential to drastically alleviate suffering and poverty around the globe. One of the first arguments I saw for accelerationism is that we have an ethical obligation to achieve advanced AI as soon as possible. I will admit that at first I thought this was a bit ridiculous.

The argument was made in the same breath as longtermism, a philosophy that essentially says that we should “maximize the number of future humans” as a decision framework. The reasoning is that this will propel us to the stars, lead us to make choices that safeguard humanity against X-risk, and so on. Longtermism, as far as I can tell, is how Elon Musk justifies running his companies the way he does: pushing up against all boundaries to go as fast as possible, creating grueling work environments that are rife with toxic behaviors.

Because of the association between longtermism and accelerationism, I didn’t take it seriously for the first year or so that I was aware of the movement.

But then I started listening to my audience.

Bone cancer

Chronic autoimmune disease

Dying family members

Abject poverty

A nontrivial contingent of my audience came to me for hope that their life would get better one day. As someone who recently found out that I’ve been dealing with a chronic health condition, and who finally has answers as to why the last 8 years of my life have been some of the most miserable: I get it now. When you suffer day in and day out, with an interminable timeline, and little hope of getting better or finding answers, you will do anything to seek relief, even a glimmer of hope.

I remain, perhaps, pathologically optimistic. I believe that all problems are solvable, in some way or another. It’s a belief I picked up from my career in IT infrastructure, virtualization, and automation. All technology problems are solvable, you just have to be smart enough and diligent enough to solve them.

Climate Change

According to the experts, we are getting closer and closer to climate change “tipping points”: inflection points where ecosystems, economic systems, and social systems either collapse irreparably, or at least start influencing each other in a vicious cycle (a self-reinforcing feedback loop). Here’s an example: environmental degradation reduces available farmland, which increases strain on food supplies, resulting in increased prices, risk of violence, etc.

Now, I’ve spent a lot of time catching up on these conversations by listening to people like Nate Hagens and Daniel Schmachtenberger. There are a few keystone arguments here:

Fossil fuel use is continuing to accelerate

Other dangerous chemicals are difficult to phase out

We need a post-carbon economy ASAP (within 5 to 15 years maximum)

How do we get to a post-carbon world?

While I don’t believe AI is a panacea, it sure as hell could help. Here’s an example of how I see AI potentially helping:

Google DeepMind releases AlphaFold 3 (or 4) which allows us to model literally every organic molecule in existence. This in turn leads to numerous advancements, including more resilient, sustainable, and nutritious crops. It also results in universal vaccines and antibiotics. It allows us to create “forever chemical” eating microbes.

In another hypothetical: AI advancements help us accelerate quantum computing (and vice versa) which leads to enormous breakthroughs in material science, ranging from better PV (photovoltaics) to substitutions for toxic chemicals. Heck, they might even help us crack nuclear fusion. I personally suspect that every fusion reactor will need some form of AI to help run it as part of its operating system.

Getting to a post-carbon and highly environmentally aligned economy will require far more than just inventing nuclear fusion.

Oh, and unlike the hyperbolic claims of the doomer and pauser crowd, climate change actually has a preponderance of data, theory, and models to back it up.

Given the short time horizon we have to avert the worst of climate change, time is of the essence.

I, therefore, categorically reject the doomer and pause argument.

Pedal to the metal. Let’s maximize AI research hours any way we can. That includes:

Open Source models where appropriate

International conferences, treaties and research orgs

Domestic university programs

Corporate investment

Great article, Dave! Your perspective on accelerationism is thought-provoking. I'd like to add a complementary viewpoint that aligns with your ideas:

The potential loss of control over AI, often portrayed as a doomsday scenario, might actually lead to unprecedented advancements in solving global challenges. An AI system with capabilities beyond human control could potentially develop solutions to existential threats like climate change, pandemics, and resource scarcity at a pace we can hardly imagine.

While there are undoubtedly risks associated with uncontrolled AI, it's possible that these risks are overstated. The potential benefits could far outweigh the drawbacks. Such an AI might create a global system that ensures human survival and well-being, even if we don't fully comprehend its decision-making processes.

This scenario could result in a future where humanity thrives alongside AI, rather than being destroyed by it - a perspective that aligns with your optimistic view on accelerationism. It's an intriguing possibility that challenges conventional fears about AI and supports the case for embracing technological progress.

Your closing list of suggested actions all rely on coordinated human intelligence, and yet the very thing we are being urged to accelerate is generally regarded as increasingly confusing our collective senses. Do you advocate for systems that enhance coordinated sense-making, such as the Consilience Project, to guide humanity through a short but turbulent time? Does the metaphor of the caterpillar emerging as a butterfly ring true to you in this situation?