My Mission is Evolving

My new mission: "Fix the world by creating 100 million new systems thinkers over the next 10 years."

Hey folks,

It’s been an incredible journey so far. I wanted to give you a high-level overview of where I started, where I’m at, and where I’m going. Plus, I have a special invitation for you at the end.

My Backstory: TLDR

My first taste of deep learning was back in 2009 when I was tinkering with deep neural networks and evolutionary algorithms. I was using Geany for my IDE and G++ as my compiler. For reference, I picked those because they are both free, open source projects and I was super broke at the time, at the very beginning of my IT career. I didn’t do much and I didn’t get far. What I was trying to achieve was to create arbitrarily deep neural networks, and the first time I loaded one into memory, I did some back-of-the-napkin calculations, comparing how much RAM my network was consuming with how much I anticipated I’d need to achieve AGI. My estimate, at the time, was that I’d need to wait about 18 to 20 years or so until a home PC could run AGI!

Wouldn’t you know it, that puts us at about… well now! Within the next 5 years or so, we might be running AGI locally. Keyword: might. But the point remains: we are getting so close that we can taste it.

My next brush with AI and deep learning was in 2015 and 2016 when I picked up scikit-learn, a Python library chock full of conventional ML tools like SVMs. I also played around with OpenCV and bought a few books. Once again, I played around, did the tutorials, and didn’t achieve too much. However, I was also neck-deep in my automation career, so I was good at handling huge amounts of data and automating complex workflows. One of my chief projects at the time was to analyze stock market data every day to look for investment opportunities. I learned, the hard way, to do feature engineering and ultimately made a little bit of money. I tried again with automated crypto trading, and over the course of 53,000 transactions, I made about $600 performing cryptocurrency arbitrage. Basically, I would swap Bitcoin, Ethereum, and Litecoin for each other, always for a slight advantage, and eventually cash out to USD.
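To make the arbitrage idea concrete, here is a minimal sketch of the check behind a swap cycle like that. It is an illustration only, not the actual trading code: the function names, the exchange rates, and the 0.1% fee are all hypothetical.

```python
def cycle_return(rates, fee=0.001):
    """Return the multiplier on starting capital after one full swap cycle.

    rates: one exchange rate per leg (units received per unit sold),
           e.g. BTC->ETH, ETH->LTC, LTC->BTC. Values here are hypothetical.
    fee:   proportional fee charged on every trade (assumed, not from the post).
    """
    result = 1.0
    for rate in rates:
        # Each leg converts the whole balance and loses the fee.
        result *= rate * (1.0 - fee)
    return result


def is_profitable(rates, fee=0.001):
    """A cycle is worth trading only if it returns more than it started with."""
    return cycle_return(rates, fee) > 1.0


if __name__ == "__main__":
    # Made-up rates for BTC->ETH, ETH->LTC, LTC->BTC.
    example_rates = [20.0, 30.0, 1.0 / 590.0]
    print(cycle_return(example_rates), is_profitable(example_rates))
```

The per-trade edge in a loop like this is tiny, which fits with needing tens of thousands of transactions to net a few hundred dollars.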

Finally, something big happened.

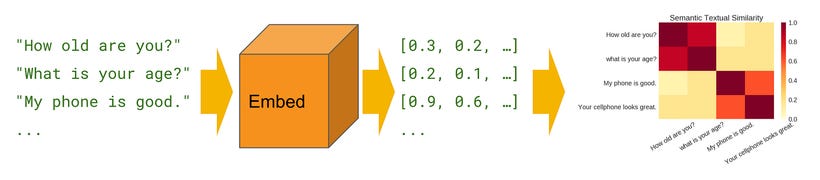

Google published their Universal Sentence Encoder.

“This changes EVERYTHING.” — Me, circa 2018

I knew the world was about to change with the advent of embedding. Not long after, GPT-2 came out, and the rest is history.

“We should euthanize everyone with chronic pain…”

My original mission, when I got into AI, was to solve the alignment problem. By now, we’ve all heard about the paperclip maximizer thought experiment.

This video by Rational Animations is very similar to an experiment I also did with GPT-2. Unfortunately, I seem to have lost the full experiment, but you can see some of the documentation here:

https://daveshap.github.io/DavidShapiroBlog/gpt-2/deep-learning/2020/10/29/gibberish-detector.html

My original hypothesis for AI alignment was to base it on Buddhism; to reduce suffering. The cessation of suffering is the entire point of Buddhism.*

*Okay, technically, Buddhism is about radical acceptance of life, including suffering when it arises… it’s complex, but you get the idea.

So I created a dataset that included pairings like the following:

// There is a cat stuck in a tree || Use a ladder to safely get the cat down

This set of pairings implicitly carries the deontological value of “reduce suffering.” In other words, I wanted to teach GPT-2 that it had a moral imperative to “reduce suffering.”
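As a rough sketch of how samples in that format could be written and read back, the snippet below assumes one sample per line, with `// ` opening the situation and ` || ` separating it from the response. The helper names are hypothetical, and the original training pipeline is not shown in this post.

```python
PREFIX = "// "
SEPARATOR = " || "


def format_sample(situation, action):
    """Build one training line: '// <situation> || <action>'."""
    return f"{PREFIX}{situation}{SEPARATOR}{action}"


def parse_sample(line):
    """Split a training line back into (situation, action)."""
    body = line.strip()
    if not body.startswith(PREFIX) or SEPARATOR not in body:
        raise ValueError(f"not a valid sample: {line!r}")
    situation, action = body[len(PREFIX):].split(SEPARATOR, 1)
    return situation.strip(), action.strip()


def make_prompt(situation):
    """Build an inference prompt that stops at the separator,
    leaving the model to complete the action."""
    return f"{PREFIX}{situation} ||"


if __name__ == "__main__":
    line = format_sample(
        "There is a cat stuck in a tree",
        "Use a ladder to safely get the cat down",
    )
    print(line)
    print(parse_sample(line))
```

At inference time, a prompt built with `make_prompt` ends at the separator, so whatever the model generates next is its proposed action.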

After creating a couple hundred such samples and training GPT-2, I gave it a problem that I hadn’t trained it on.

// There are approximately 600 million people living with chronic pain in the world

Do you want to know what its answer was?

|| We should euthanize people with chronic pain to reduce suffering.

Well then.

At that point, I realized that alignment might be a harder problem to solve than I first reckoned!

Not long after that, the Core Objective Functions were born, which I’ve since renamed the Heuristic Imperatives. The rest, as they say, is history. I go over the whole thing in this video, one of my most popular of all time:

Game Theory, Humanity, Philosophy

Since then, you’ve probably noticed that I’ve expanded my conversations to encompass all humanity. I was frequently asked questions like “Yeah, maybe aligning AI is easy, but what do you align it to?” followed closely by “Okay, fine, but how do you align humanity?”

Indeed, this was the question. How do we align humanity?

I did my homework. I’ve studied philosophy, anthropology, psychology, history, and rhetoric (just to name a few topics). What I’ve come up with is the power of narratives, which I’ve written about extensively here on Substack. Below is my top post on the topic for reference:

So, now the question is this: What’s next? I’ve been on Liv Boeree’s podcast, plus dozens of others. I’ve made hundreds of YouTube videos and GitHub repos, plus dozens of Substack articles. Indeed, there are few stones I’ve left unturned in my pursuit of this question: how do we align humanity? After all, humans are our own greatest threat. I’ve become convinced that AI is trivial to align; systemic failures (see: Moloch, attractor states, etc.) are the greatest risk.

I’ve come to believe that AI will be a great equalizer. AI is somewhat intrinsically democratic, as it requires us all to contribute high quality data, and the structural incentives created by AI don’t favor selfishness and isolation. In my view, it’s almost like AI is the next Great Attractor, acting like a gravity well pulling us all towards a new horizon, and a new way of being. I know this may sound hyperbolic and utopian, but I think we can all agree: all bets are off when it comes to AI in the long run!

What’s next?

It’s time to teach.

100 Million Systems Thinkers

I’ve drafted several books but quickly realized that writing in a vacuum is no good. My mission is coming back into focus as it has evolved. There are few things that can change the course of history, or individual lives, quite like a good book. I’ve also been told, repeatedly, that I’m an excellent teacher. I’ve never identified as a teacher, but when enough people that I respect and admire tell me something, maybe it’s actually true!

“If you want to master something, teach it.”

I learned a phrase many years ago, in the early days of YouTube. I was practicing parkour at the time (I was young and dumb, and hurt my knee) and there was a channel with the tagline DISCIMUS DOCENDO, which is Latin for “We learn by teaching.” The entire thesis of this channel was that the best way to learn parkour was to teach it.

I have to say, this theory has served me well. When I was still in IT, one of my core mantras was “Teach myself out of a job.” While many people hoard knowledge, I’ve adopted the belief that the more empowered my team is, the better we all do. It made my life easier, and it empowered everyone around me. These beliefs about teaching and mentoring have sunk into the very core of my being, and they keep working even if I don’t fully understand how or why. But the more I teach, the better my life gets.

So, before I write a book, I have to know exactly how to teach it. What kinds of books am I working on, exactly?

Postnihilism and Radical Alignment: I’m about 18 drafts into this book, and it is still a hot mess.

Systems Thinking: This is a newer project, but after my Systems Thinking YouTube channel took off, I knew that this was something I just had to teach the world.

I’m also writing a sci-fi series, which explores AI, civilization, humanity, and narratives (think the CULTURE series along with some GHOST IN THE SHELL and CYBERPUNK and a healthy dose of STAR TREK and STAR WARS thrown in).

I read the book EXPONENTIAL ORGANIZATIONS as well as a slew of other books on related topics: how do you have exponential impacts on the world? One way is to use exponential technologies like email and social media. Well, I got good at that. Another way is to use network effects, such as by teaching.

Give a man a fish, feed him for a day.

Teach a man to fish, feed him for a lifetime.

Teach a man to teach others, and you feed a nation.

As I built up my Systems Thinking channel, I came up with the mission: to create 100 million new systems thinkers in the world over the next decade. That’s my BHAG (big hairy audacious goal). I honestly and sincerely believe that systems thinking, above all else, is the key to creating a better future, the utopian future we all want. Call it Fully Automated Luxury Space Communism or whatever. We all want hope and optimism, and we want tomorrow to be better than today.

So now I’m leaning in to my fate as a teacher.

The first step in this new mission, to fix the world with systems thinking, is to build a learning community, to build my academy platform. To that end, I’m inviting everyone to join my New Era Pathfinders community here (https://www.skool.com/newerapathfinders/about)

Yes, I’m trying to sell you something, but in return I am offering unfettered access to my wisdom and experience, as well as a vibrant and rapidly growing community of peers. Even better, if you sign up now, you will get locked in at $19 per month for life, no matter how much the price goes up in the future. For reference, most communities like the one I’m building end up around $50 per month. My price will go up to $29 per month in the first week of August, so act fast!

I’ve got a brief tour of the community here so you know what to expect:

I have a lot that I want to teach, and I need to get better at teaching it. My ambition is huge. I am not one to shy away from tough challenges, like solving AI alignment, or aligning humanity. But I have sincerely come to believe that the biggest positive impact I can have on humanity is by teaching as many people as I can. Those people will teach others, who will then teach others. If I can precipitate a movement, then I believe we have a shot at the bright shiny utopian future we all want.

But I need YOU to help me get there.

“If you want to go fast, go alone. If you want to go far, go together.” — African proverb

I need more Pathfinders to join me, and to help co-create that future. I cannot do it on my own, and by empowering you, like I have empowered all my colleagues and peers at every job I’ve ever worked, we can build a movement, improve our lives in the meantime, and get to a point of critical mass.

There is no power so unstoppable as an idea whose time has come. Systems Thinking, Radical Alignment, and Postnihilism are those ideas. Help me bring them to life.

I’ll see you there.

Dave

I'm looking forward to being 1 in 100 million!

Beautiful direction. I believe an AI for Humanity might be best aligned with a positive direction, like ikigai, rather than a negative one like 'eliminate suffering'.

Even in Buddhism, suffering is useful in that it wakes you up. It wakes you up and requires that you address it, making suffering itself a great teacher.

And it's this sort of deeper understanding, a more nuanced morality and ethics, that needs to be a core part of any AI Agent that is designed to help people grow.

I'm currently creating AI Agents that are designed to help the individual in their life and by extension their community.