AI Slow vs. Fast Takeoff, Defined

Cut through the rhetoric: what people mean by each, why benchmarks keep saturating, and how super-exponential autonomy reframes the next few years

The term “fast takeoff” was actually coined by Eliezer Yudkowsky (the famous anti-AI doomer) in the early 2000s, as he was thinking through different future scenarios. The original meaning was pretty sci-fi:

FAST TAKEOFF refers to a scenario in which an AI transitions rapidly from roughly human-level intelligence to vastly superhuman intelligence, with the change occurring over a very short period—potentially days or even hours.

This was clearly inspired by Skynet and The Terminator—the reasoning was “once AI becomes sentient/self-improving, you immediately get a cascade and lose control.” It should be noted that no one with any technical education or hands-on experience with AI thinks this is remotely feasible.

However…

I am one of the people who believe in a more modern “fast takeoff” scenario, but if you had asked me to define the historical context and exact meaning of “fast takeoff” before writing this post, I’d have described something more like: “AI will continue to progress exponentially and unabated.”

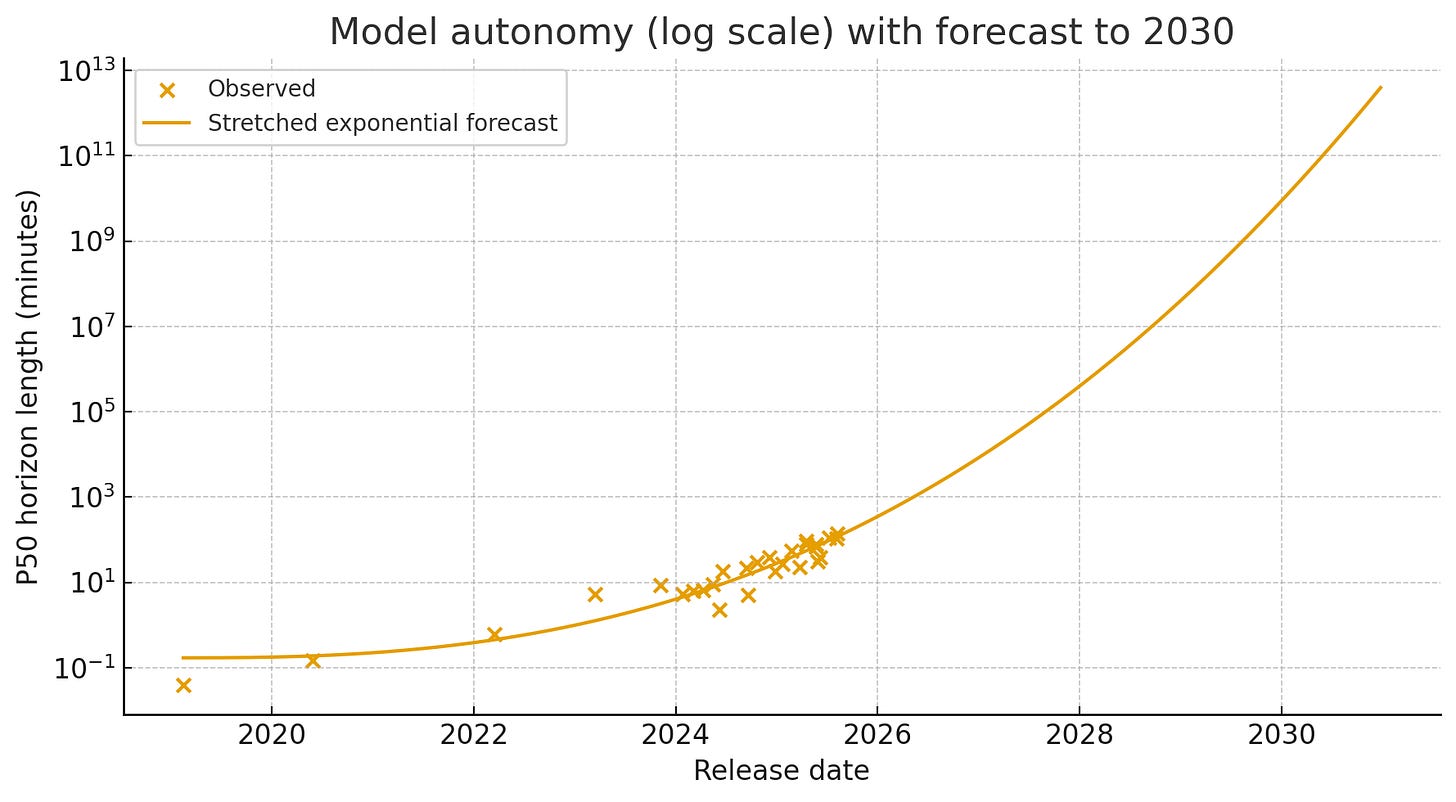

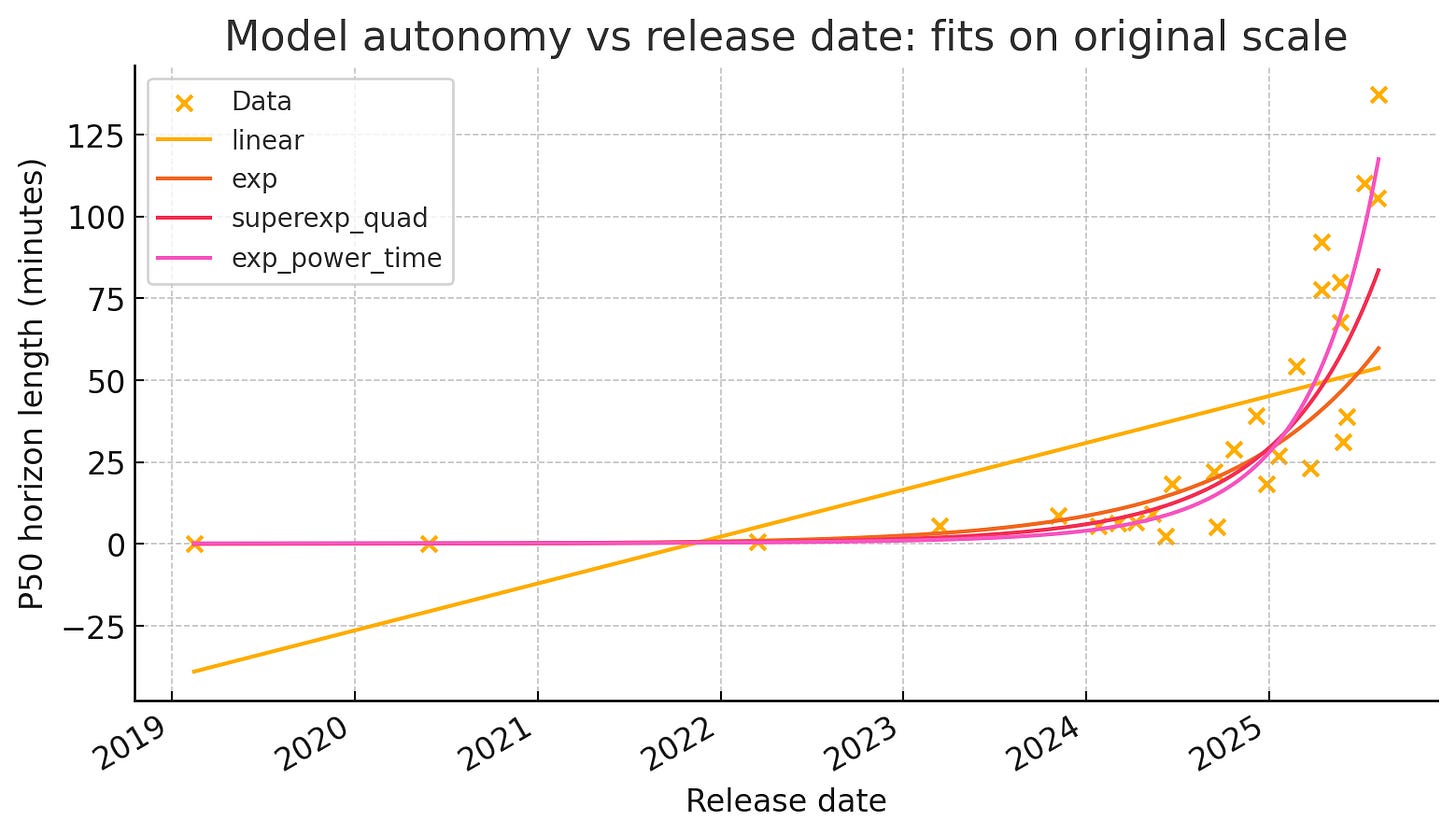

In point of fact, AI is progressing super-exponentially on some metrics.

The above data comes from METR’s research on model autonomy, which tracks the length of tasks (measured by how long they take a skilled human) that AI models can complete. When you model this data on a super-exponential curve, it fits markedly better than a simple exponential or logarithmic curve. If that fit holds, then by mid-2027 AI models will be succeeding at a 50% rate on tasks that take humans over 850 hours. The following year, in mid-2028, that number rises to nearly 60,000 hours.
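To make the difference between an exponential and a super-exponential trend concrete, here is a minimal sketch. It assumes a simple model: in the exponential case the task horizon doubles on a fixed schedule, while in the super-exponential case each doubling takes a constant fraction less time than the one before. This is one common way to formalize “super-exponential,” not necessarily the curve behind the chart above, and the function names and every parameter value (1-hour starting horizon, 6-month first doubling, 10% shrink per doubling) are hypothetical, not fitted to METR’s data.

```python
import math


def exponential_horizon(h0: float, doubling_months: float, t_months: float) -> float:
    """Task horizon after t_months when it doubles every `doubling_months` (constant rate)."""
    return h0 * 2 ** (t_months / doubling_months)


def superexponential_horizon(
    h0: float, first_doubling_months: float, shrink: float, t_months: float
) -> float:
    """Task horizon after t_months when each doubling takes `shrink` times as long as the last.

    With 0 < shrink < 1 the doubling times form a geometric series that sums to
    first_doubling_months / (1 - shrink), so the horizon becomes infinite at that
    finite time. Past that point this model is undefined.
    """
    if h0 <= 0 or first_doubling_months <= 0:
        raise ValueError("h0 and first_doubling_months must be positive")
    if not 0 < shrink <= 1:
        raise ValueError("shrink must be in (0, 1]")
    if shrink < 1:
        blowup = first_doubling_months / (1 - shrink)
        if t_months >= blowup:
            raise ValueError(f"model diverges at t = {blowup:.1f} months")

    horizon, elapsed, step = h0, 0.0, first_doubling_months
    # Walk through whole doublings; each one takes `shrink` times as long as the previous.
    while elapsed + step <= t_months:
        elapsed += step
        horizon *= 2
        step *= shrink
        if math.isinf(horizon):  # numerically at the blow-up point
            return horizon
    # Interpolate partial progress through the doubling currently under way.
    return horizon * 2 ** ((t_months - elapsed) / step)


# Hypothetical parameters (not fitted to METR's data): 1-hour horizon at t = 0,
# a 6-month first doubling, and each later doubling 10% shorter than the last.
for months in (12, 24, 36):
    exp_h = exponential_horizon(1.0, 6.0, months)
    sup_h = superexponential_horizon(1.0, 6.0, 0.9, months)
    print(f"{months} months: exponential {exp_h:.1f} h, super-exponential {sup_h:.1f} h")
```

The `blowup` check is the interesting design choice: when every doubling is shorter than the last, the doubling times add up to a finite total, so this kind of curve reaches infinity at a finite date. That is one reason super-exponential extrapolations produce such dramatic numbers a year or two out, and also why they are very sensitive to the fitted parameters.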

Even more interesting: whispers from the field suggest this data undersells what AI can already do. Part of the reason is user skill, but another is that this data comes from raw model behavior, NOT agentic frameworks. In other words, the underlying AI models are getting better at a super-exponential rate, and that’s before additional guardrails and architecture!

But let’s not get lost in the weeds.

This data, to me, counts as “fast takeoff” even though it plays out over the next 3 years. Never in the history of humanity have we seen a technology improve this rapidly.

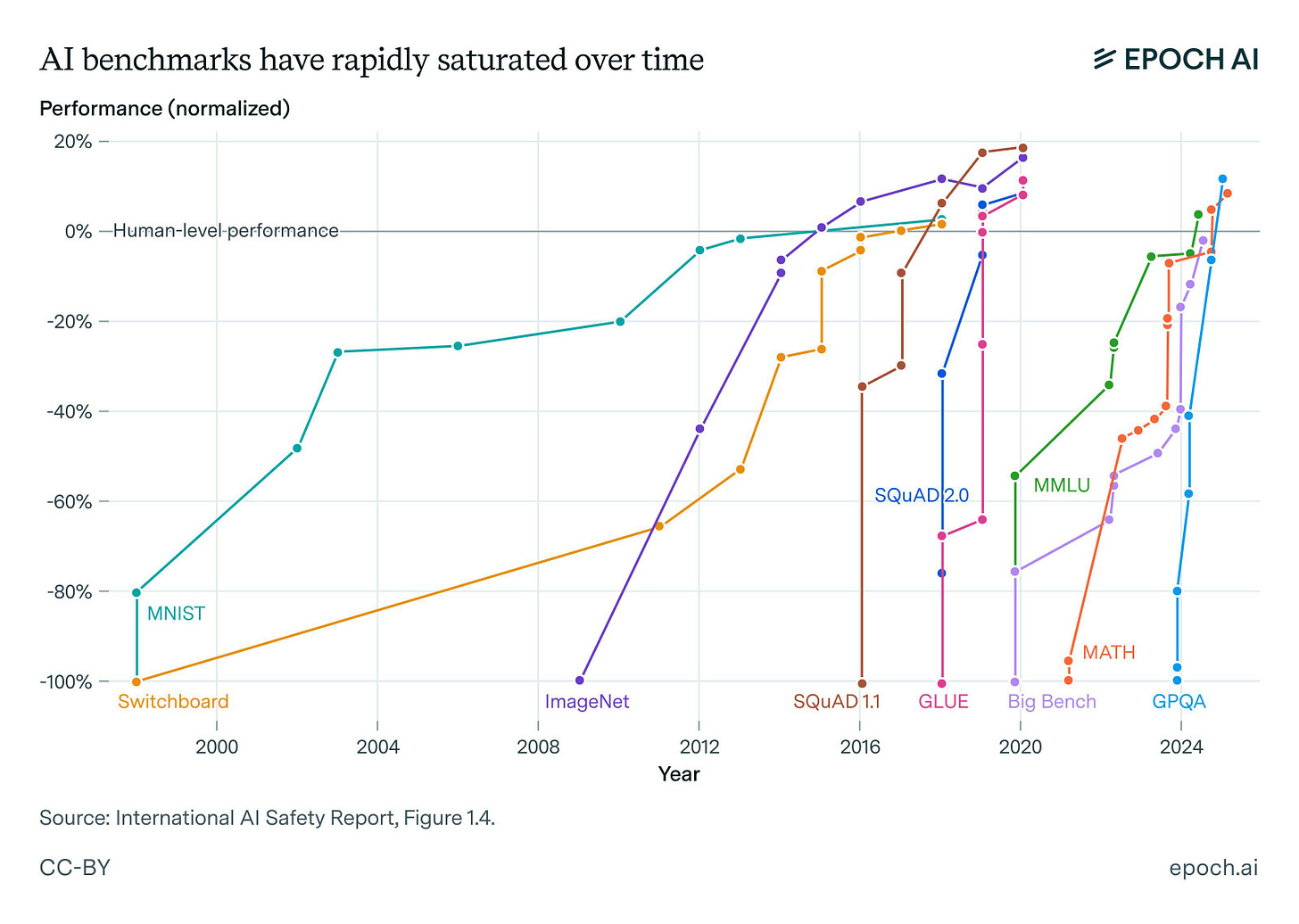

Now, the problem with popular terms like AGI and fast takeoff is that they cause people to miss the forest for the trees. Ask 10 people what “AGI” means and you’ll get 10 different answers: full autonomy, sentience, can do everything a human can do, can walk and talk. As a result, people overlook or downplay what AI can do today. The ‘best’ (funniest) definition of AGI I’ve heard came from a computer scientist who said it’s “whatever computers can’t do today.” That captures the constant goalpost moving everyone has noticed. As I’ve argued before, AGI is more of a mental archetype than anything technical.

Likewise, when “fast takeoff” can mean anything from “Skynet suddenly breaks out of the lab in a matter of minutes or hours” to “model autonomy is rising super-exponentially over the next few years,” the term becomes essentially meaningless.

To borrow from ancient Chinese philosophy: we lack a ‘rectification of names.’

In Confucian philosophy, the first task is to ‘rectify’ names (achieve consensus on terminology). Without consensus on terminology, how can anything be achieved or discussed?

The problem here is that “fast takeoff” is way easier to say and type than “super-exponential progression of model autonomy.” I’ve tried to coin other terms for specific phenomena, like FARSI (“Fully Autonomous Recursive Self-Improvement”), but that acronym is problematic because it collides with the name of the Persian language. Beyond political correctness, it’s simply not a sticky term.

“Sticky” describes something that lends itself to memetic transmission. Internet forums, from LessWrong to 4chan to Twitter, are “meme factories” where ideas and terms are rapidly tested for stickiness (i.e., their ability to become a “mind virus”).

What about Slow Takeoff?

For me, “fast takeoff” approximates “a few years of ramp-up” plus another couple of years of integration and business uptake. So what would “slow takeoff” look like? Again, ask 5 different people and get 6 different answers.

For some skeptics and luddites, “slow takeoff” would mean “true AGI is decades away,” which doesn’t seem like a rational or defensible belief to me. One must truly bury one’s head in the sand (or be spectacularly ill-informed) to conclude that AI will not categorically surpass human intelligence within the next decade when, across many benchmarks, it already has!

One could chalk the problem up to postmodernist and post-structuralist thinking: words have no intrinsic meaning, and all truth is relative. But that’s like calling a tsunami an inconvenient amount of water. It doesn’t explain the underlying why.

Whenever something new comes along, it has to be metabolized by society. We have to make sense of it, which is a collective effort. The noosphere or exocortex (whichever you want to call it) has to process the new ideas, experiment with them, coin new terms, and litigate meaning.

We do this in public, largely on the internet now. People argue, write blogs, produce video essays, and tweet at each other until the novelty wears off. Once the novelty is gone and the dust has settled, we have a better understanding of what something means (and what it doesn’t mean!).

Technology, in particular, tends to evoke a special kind of catastrophizing.

Cheap novels read for pleasure (penny dreadfuls) were going to destroy Victorian society

Trains would cause the human body to explode

Telephones would cause women to have psychotic breaks

High-voltage power lines would cause cancer

So on and so forth.

In my professional estimation, this can be summarized as: chimpanzee brain think new thing spooky.

Until we acclimate to new things, they feel dangerous and scary. Uncertainty is an unknown quantity. Whether something is “good or bad” could reasonably be defended as entirely up in the air.

To my more excited fans, all of this counts as “slow takeoff”—who’s got time for business cycles, SCOTUS decisions, and litigating the meaning of AI in public? We need solutions now!

Happiness is often determined by how much reality matches your expectations. AI X-risk believers are by and large depressed or angry at the current progress of things. Why? Because it’s all happening too fast. AI skeptics and luddites are unhappy because AI is actually working, and no matter how often they call it “stupid,” it keeps solving problems and getting adopted by businesses. AI optimists and accelerationists are unhappy because progress isn’t fast enough and is being hampered by pesky things like politicians. Everyone else is unhappy because AI is harming jobs, the environment, and artists.

This means that AI is positioned to be uniquely unpopular for the foreseeable future with pretty much everyone except realists and forward-thinking businesspeople.

In my experience, no one ever concedes they were wrong or updates their beliefs. They just leave the field once the topic has moved on. AI will continue to diffuse through society, business, and politics, and one day it just won’t be the big scary new thing it is today. The question becomes: how much more tense will things get before the fever breaks?

I have a feeling that we’re coming to terms with what AI means, but that is a far cry from being “through the worst of it.”

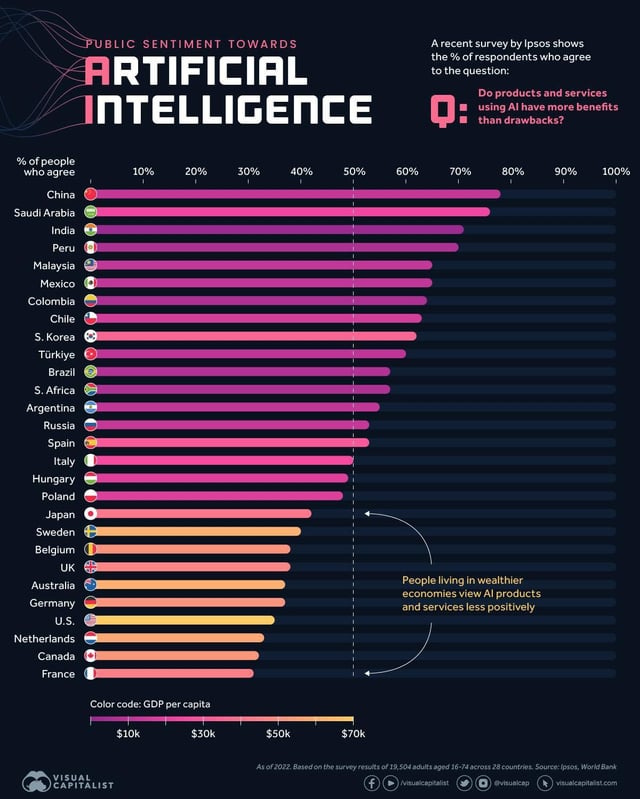

I wonder where Singapore would fall on that chart 🤔

Do you use AI to write your posts?