Cognitive Hyper-Abundance is Coming

GPT-5's release was disappointing to some people, but let's look at the data and forecast where this is going.

Benchmarks are all the rage right now, but as many people (including myself) point out: benchmarks aren’t everything. The real world is the real test. So what if there were a benchmark that was grounded in real-world outcomes?

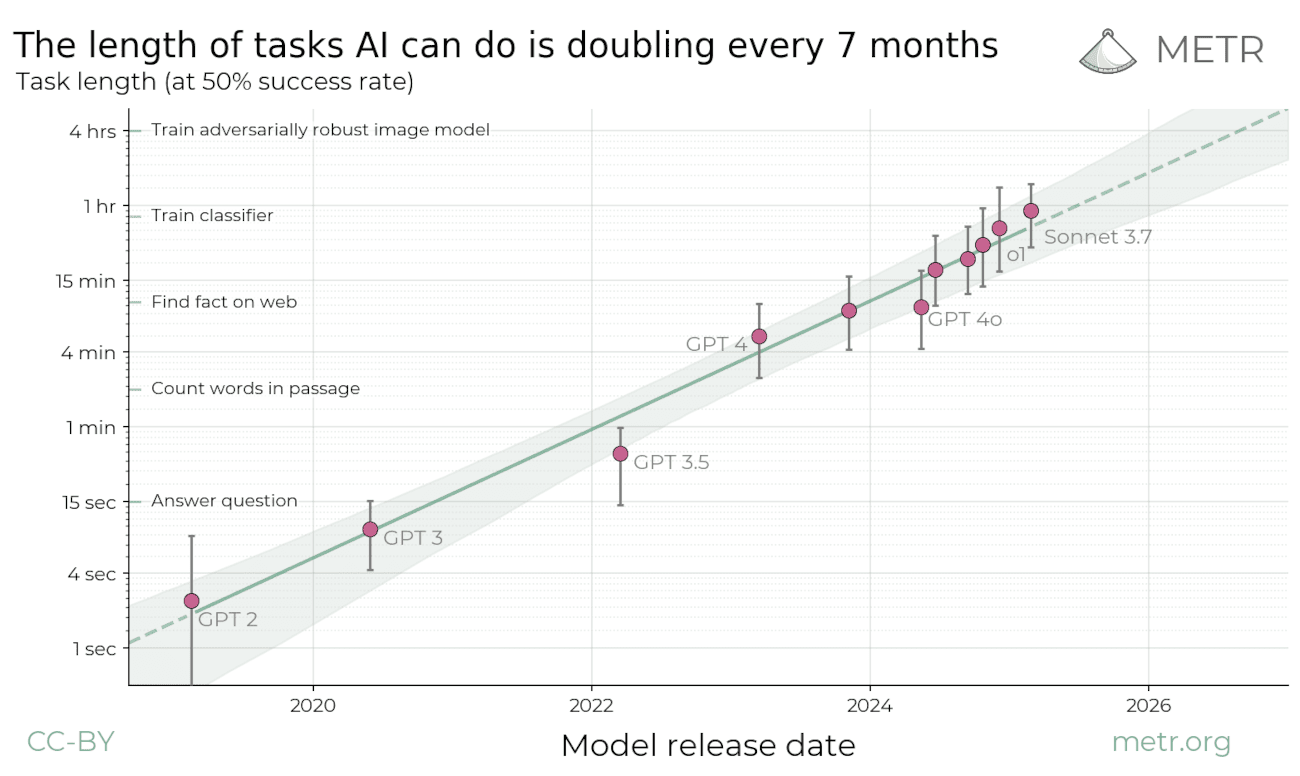

Enter the METR benchmark:

You can check out this source here: https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks/

Now, that looks like a nice, simple exponential curve. METR also renders this data on a log scale. They say the task horizon is “doubling every 7 months,” but does that really fit the data?
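To make the “doubling every 7 months” claim concrete, here is a minimal Python sketch of what a fixed doubling time implies, along with the inverse calculation (what doubling time two data points imply). The function names and the 60-minute starting value are my own placeholders, not METR's numbers.

```python
import math


def horizon_after(h0_minutes: float, months_elapsed: float,
                  doubling_months: float = 7.0) -> float:
    """Task horizon under a pure exponential with a fixed doubling time."""
    return h0_minutes * 2.0 ** (months_elapsed / doubling_months)


def implied_doubling_months(h_start: float, h_end: float,
                            months_elapsed: float) -> float:
    """Doubling time implied by two horizon measurements taken months apart."""
    return months_elapsed * math.log(2.0) / math.log(h_end / h_start)


# Hypothetical starting point: a 60-minute horizon.
print(horizon_after(60.0, 7.0))    # one doubling
print(horizon_after(60.0, 14.0))   # two doublings
print(implied_doubling_months(60.0, 240.0, 14.0))
```

If the trend is really super-exponential, the implied doubling time computed from successive pairs of models should shrink over time rather than hover around a constant.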

Here’s what I did:

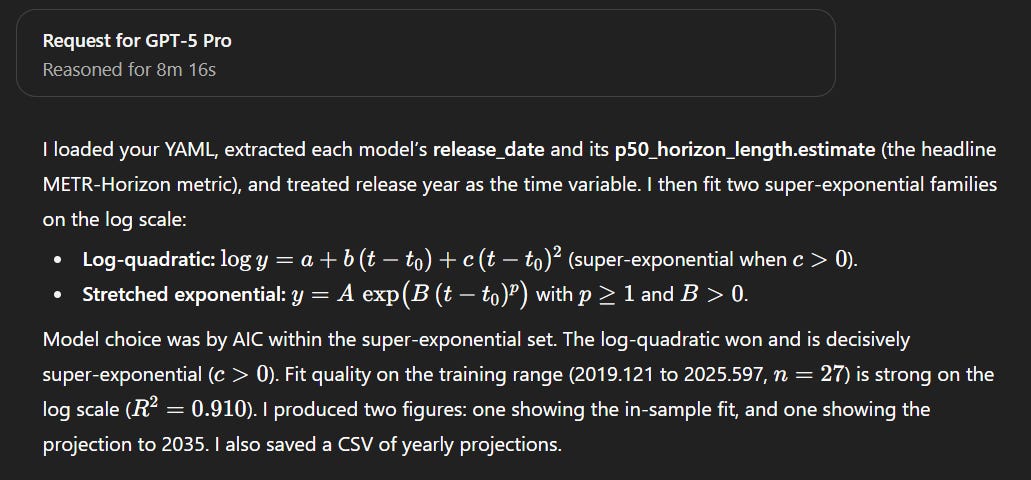

I downloaded the raw data from METR’s site, handed it to GPT-5-Pro, and asked it to fit a super-exponential curve. Here’s what it said:

I loaded your YAML, extracted each model’s release_date and its p50_horizon_length.estimate (the headline METR-Horizon metric), and treated release year as the time variable. I then fit two super‑exponential families on the log scale:

Log‑quadratic: $\log y = a + b\,(t - t_0) + c\,(t - t_0)^2$ (super‑exponential when $c > 0$)

Stretched exponential: $y = A \exp\!\big(B\,(t - t_0)^p\big)$ with $p \ge 1$ and $B > 0$

Model choice was by AIC within the super‑exponential set. The log‑quadratic won and is decisively super‑exponential ($c > 0$). Fit quality on the training range (2019.121 to 2025.597, $n = 27$) is strong on the log scale ($R^2 = 0.910$). I produced two figures: one showing the in‑sample fit, and one showing the projection to 2035. I also saved a CSV of yearly projections.
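For anyone who wants to reproduce this kind of fit, here is a rough, standard-library-only Python sketch of the log-quadratic model described above. It is not the code GPT-5-Pro ran (I don't have that). The function names are hypothetical, and the demo data is synthetic, generated from a known curve purely to check that the fit recovers its coefficients. It is not METR's data.

```python
import math


def solve_3x3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= f * a[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(a[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (a[i][n] - s) / a[i][i]
    return x


def fit_log_quadratic(years, horizons):
    """Least-squares fit of log(y) = a + b*(t - t0) + c*(t - t0)**2."""
    t0 = min(years)
    dts = [t - t0 for t in years]
    logs = [math.log(y) for y in horizons]
    # Normal equations for the polynomial basis [1, dt, dt^2].
    s = [sum(dt ** k for dt in dts) for k in range(5)]
    rhs = [sum(ly * dt ** k for dt, ly in zip(dts, logs)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] for i in range(3)]
    a, b, c = solve_3x3(m, rhs)
    return a, b, c, t0


def predict(params, year):
    """Projected horizon at a given (fractional) year."""
    a, b, c, t0 = params
    dt = year - t0
    return math.exp(a + b * dt + c * dt * dt)


# Synthetic placeholder data from a known curve (a=-3, b=0.5, c=0.1).
demo_years = [2019.0 + 0.5 * i for i in range(13)]
demo_horizons = [math.exp(-3.0 + 0.5 * (t - 2019.0) + 0.1 * (t - 2019.0) ** 2)
                 for t in demo_years]
demo_params = fit_log_quadratic(demo_years, demo_horizons)
print(demo_params)
```

The sign of `c` is the whole story here: `c > 0` means the growth rate itself is growing, `c = 0` collapses back to a plain exponential. With NumPy installed, `numpy.polyfit(dt, log_y, 2)` does the same least-squares fit in one call.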

(note, the formulas don’t render correctly on Substack, screenshot below)

So, what happens if this trend continues for any length of time? Many people (myself included) have been looking for “sigmoid curves” where we start to get diminishing returns. In fact, many people have interpreted the release of GPT-5 as a sign of “diminishing returns,” but at least in the context of autonomously solving software challenges, GPT-5 is ahead of the curve.

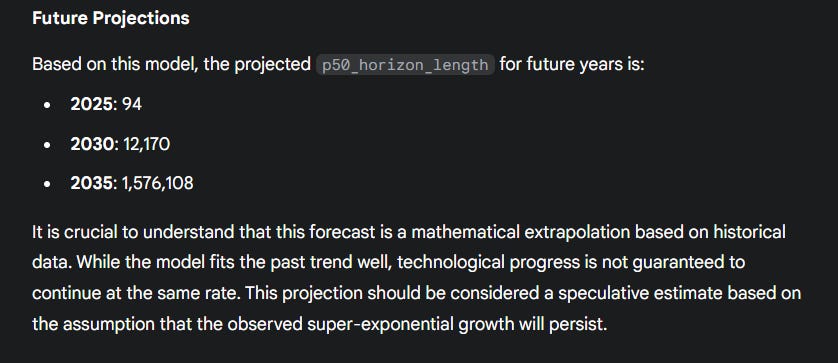

Here’s what this super-exponential looks like if we forecast it out:

So what does this mean? It’s difficult to wrap your head around this. If this trend continues for any length of time (and there’s no evidence of it slowing down; if anything, the super-exponential fit says it’s still accelerating) then we’re going to enter a new regime of machine autonomy that will knock your socks off within just a few years.

Below, I’ve shown you the data above, but in spreadsheet format so you can appreciate exactly what we’re looking at:

For context, 1800 hours of human work is approximately 1 calendar year of a full-time job: 8 hours a day, 5 days a week, minus holidays and time off. And by the beginning of 2029, it looks like AI will be able to “dual-shot” that. I know that’s not how 50% success works, but you get the idea.
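Here is the back-of-the-envelope arithmetic behind that 1800-hour figure as a small Python sketch. The helper names are mine, and the 52-week gross year is an assumption. The post only states the 1800-hour total.

```python
HOURS_PER_WORK_YEAR = 1800  # the figure used in this post


def gross_hours_per_year(hours_per_day: int = 8, days_per_week: int = 5,
                         weeks: int = 52) -> int:
    """Hours in a year of full-time work with no time off at all."""
    return hours_per_day * days_per_week * weeks


def horizon_in_work_years(horizon_minutes: float,
                          hours_per_work_year: float = HOURS_PER_WORK_YEAR) -> float:
    """Express a task horizon (in minutes) as a fraction of a work-year."""
    return horizon_minutes / 60 / hours_per_work_year


print(gross_hours_per_year())        # 2080
print(HOURS_PER_WORK_YEAR / 40)      # 45.0 forty-hour weeks
print(horizon_in_work_years(120))    # a 2-hour horizon, as a fraction of a work-year
```

So 1800 hours corresponds to about 45 forty-hour weeks, and a 2-hour horizon is a little over a thousandth of a work-year.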

GPT-5 just crossed the 2 hour threshold, so we’re at the very early stages of this takeoff.

When I use the term “cognitive hyper-abundance,” this is what I mean: intelligence too cheap to meter, and not just “too cheap to meter” but so radically abundant that we really have no model for what this means. This would completely obliterate cognitive cycles as any constraint on human endeavors. Cognitive limits, intelligence, mental bandwidth… these will simply not be bottlenecks on economic activity, intellectual pursuits, or scientific progress.

That raises the question: if we “solve” machine autonomy in the next few years, what are the consequences of this? What are the remaining constraints?

Physics, first of all, remains a frustrating constraint. No amount of IQ can rewrite the laws of thermodynamics or shorten travel distances or reduce mass. Steel will still melt at 2500°F, a joule will still be a joule, and so on. Gigantic instruments like LIGO and ITER and JWST will still take billions of dollars and many years to construct. That’s not to say that “super smart AI cannot help with logistics” but it does still come down to logistics.

This graph also assumes frictionless growth from here, which GPT-5 said is “physically impossible,” but I beg to differ. As models become more sophisticated, more multimodal, and more autonomous, I don’t see any reason why an AI in a few years couldn’t do one year’s worth of human work in an hour or so. They already “think” at super-human speeds and are only getting faster. Think about it; this is not just about raw parameter counts.

Parameters go up

Tool use goes up

Underlying hardware goes up

Reasoning becomes distilled and quantized

Data becomes synthetic

Better model architectures and software architectures

What we’re seeing in the METR data is the confluence of everything being poured into AI.

With all that being said, it’s worth looking at the error bars. Here’s what Gemini barfed out:

I also gave this to Grok, and its first pass suggested we’d be at 50 quadrillion minutes by 2035. For context, that’s about 95 billion years, or 7x the age of the universe. I asked it to check its work. When Grok double-checked, it confidently arrived at 49 quadrillion minutes by 2035. Well okay then. When I asked Gemini to double-check its work, it revised itself up to 1.3 trillion minutes by 2035, or about 10x less than GPT-5-Pro’s projection.
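To sanity-check that conversion, here is a quick Python sketch. Note that it converts around-the-clock calendar minutes (not 1800-hour work-years), which is what the 95-billion-year figure implies. The 13.8-billion-year age of the universe is the commonly cited approximate value, and the helper names are mine.

```python
MINUTES_PER_CALENDAR_YEAR = 60 * 24 * 365.25
AGE_OF_UNIVERSE_YEARS = 13.8e9  # commonly cited approximate value


def minutes_to_years(minutes: float) -> float:
    """Convert a duration in minutes to calendar years (24 hours a day)."""
    return minutes / MINUTES_PER_CALENDAR_YEAR


def universe_ages(minutes: float) -> float:
    """Express a duration in minutes as multiples of the age of the universe."""
    return minutes_to_years(minutes) / AGE_OF_UNIVERSE_YEARS


grok_minutes = 50e15  # "50 quadrillion minutes"
print(f"{minutes_to_years(grok_minutes):.3g} years")
print(f"{universe_ages(grok_minutes):.2f}x the age of the universe")
```

Swapping in Gemini's 1.3 trillion minutes (`1.3e12`) gives a figure in the low millions of years, which is still absurd, just less cosmically so.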

The only thing I can say about this is:

If these trends continue for any length of time, then we are on track to have, for all intents and purposes, solved machine autonomy within the next decade.

That may seem like a boiler-plate assertion, but I think that it bears repeating: we really have no way of forecasting the impact this will have on economics, human endeavors, or military strategy. AI’s clock speed will be limited by the time-step function of physical reality, not brainpower.

What will it be like to live in a “solved” world? I have some guesses, but they are just guesses:

Biology will be solved pretty quickly. Biology is highly complex, granted, but it is not magic. It’s all based on the building blocks of elements and molecules.

All of math and AI will hit compounding returns. AI is just math. Math underpins all of STEM. Computer science will functionally be “solved” as well.

New Grand Unified Theories of Everything will emerge for physics. Einstein spent about 8 to 10 years working on his General Theory of Relativity. Assuming he put about 1000 hours of work into his theory or adjacent work with spillover effects every year, that’s 8,000 to 10,000 hours of deep math work. If my math is right, then AI should be able to one-shot that by 2030.

Intelligence and information arbitrage will disappear for business, government, and military. Much of business today is predicated on alpha: discovering efficiencies that others have missed. Cognitive hyper-abundance on these scales basically erases that as a possible vector. Likewise, any military that is using these advanced AIs will have won-via-simulation before the battles even start.

I can see why some people, namely AI “x-risk” people, would see these numbers and panic: the level of uncertainty climbs as AI becomes more powerful. The “margin of error” for mistakes, ostensibly, drops. But that assumes that these new models will just fall out of the sky, untested, and that the world will have no time to adapt. Simply forecasting an eventuality that may occur in 5 to 10 years, as this blog post does, gives us plenty of runway to adapt.

All I can say with any level of confidence is that “cognitive hyper-abundance is coming” but as for what that entails, the rest is just guesswork.

Agree with everything except the physics part. Einstein's work on GR was less about him doing math and more about his ability to think up bizarre yet correct ideas. Hilbert completed Einstein's math faster than Einstein did. My point is that math is not necessarily that important to huge discoveries in physics. Yes, it's important, but it's not the core of leapfrog ideas. A lot of the time exotic math doesn't conform to our reality, and many theories can't even be physically tested. On top of that, logic doesn't seem to make sense in quantum mechanics.

When I was a kid 2025 was the year of the singularity. If this article doesn't confirm that date I don't know what would.

That being said: remember the joke at the start of COVID that was an exponential graph that read: "Time spent looking at exponential graphs"? If the curve continued we would all be dead by the end of 2020 or something. Exponentials have a way of rolling off in the real world.

This is showing that the acceleration is accelerating, if my monkey brain understands it at all. Even if the acceleration slows, we still have no idea where we will be by the end of it.