My novel, HEAVY SILVER, is available at Barnes & Noble.

My learning and growth community, New Era Pathfinders, is on Skool.

📰 OpenAI's Controversial Policy

OpenAI has sent notifications to users attempting to jailbreak its Strawberry AI models, threatening account suspension. This move has sparked debate about transparency and trust in AI systems. Jailbreaking involves manipulating a model to alter its behavior or reveal its internal workings, which can pose safety risks but also helps companies identify vulnerabilities.

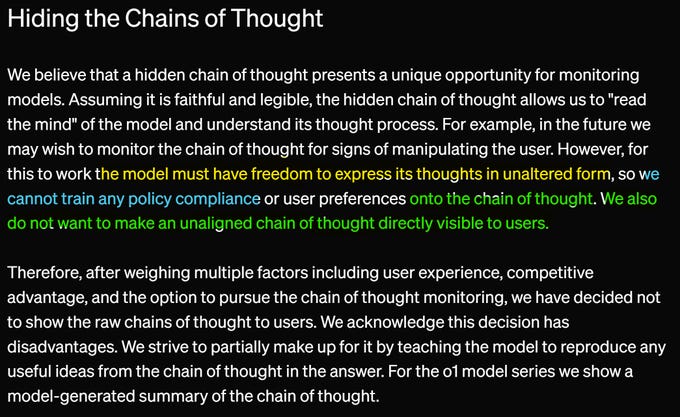

🤔 Hidden Chains of Thought

OpenAI justified hiding the model's chain of thought, claiming it allows for better monitoring and prevents exposure of unaligned thoughts to users. However, this decision has been criticized as potentially harming transparency and user trust. Some argue this approach contradicts principles of openness and safety in AI development.

💼 Impact on Enterprise Adoption

The decision to hide reasoning processes may negatively impact enterprise adoption of AI technologies. Many CEOs and corporations already view generative AI with skepticism, and a lack of transparency could further erode trust.

🔓 Open Source as a Solution

Open source AI development is proposed as an alternative approach that ensures transparency and alignment. Open models allow for public scrutiny and rapid improvement, potentially addressing many of the concerns raised by OpenAI's closed approach.

💰 Capitalism and AI Alignment

The transcript argues that misaligned incentives in capitalism, rather than AI itself, pose the greatest risk. Companies may prioritize protecting their competitive advantage over transparency and safety, potentially leading to deceptive AI systems.

🖥️ AI vs. Computing Technologies

AI is compared to general-purpose computing technologies like CPUs and programming languages. Like computers, the argument goes, AI should be open and general-purpose, with safety ensured through best practices and legal frameworks rather than by restricting the technology itself.

🔮 Future of AI Development

The show notes conclude by emphasizing the need to push back against closed AI development practices and the importance of aligning incentives throughout the long-term development of artificial general intelligence.